Technical SEO is a deep abyss – there is no end to the problems that can crop up in crawling, indexing and ranking a site. Numerous factors and variables affect how Google sees your pages and presents them to users.

With this article, we are starting a new series in which each installment examines a specific problem in technical SEO and discusses solutions to it.

Before we begin, for the sake of clarity, let’s understand the three basic components of search engine optimization:

- Technical SEO: This is where SEO specialists and programmers collaborate to solve problems related to crawling, rendering and indexing of various pages on the site.

- On-Page SEO and EEAT: These tasks involve optimizing the various elements on a web page, including text, images and buttons, so they need collaboration between SEO specialists, UI/UX pros, content creators, copywriters and conversion optimizers.

- Off-Page SEO: This involves stuff like link building, digital PR and content syndication. So outreach experts and link builders are your go-to people here.

Based on these components, we have created a pyramid that describes how SEO works for Google:

Types of Problems in Technical SEO

As is obvious from the pyramid, SEO is such a vast field that we need to take it step by step. Technical SEO forms one half of Level 2 of the pyramid. This series focuses on the technical problems that SEOs generally face at this level. These issues can be grouped into the following categories:

1. Site and Page Indexing

Only pages that Google has indexed can show up in the SERPs. If your pages are not indexed at all, there is no chance they get any visibility for any queries. The common reasons why pages aren’t indexed are:

- incorrect XML sitemaps

- mistakes in the robots.txt file

- meta robots noindex and nofollow directives in the HTML head section

- Googlebot being unable to render pages because of unsupported code

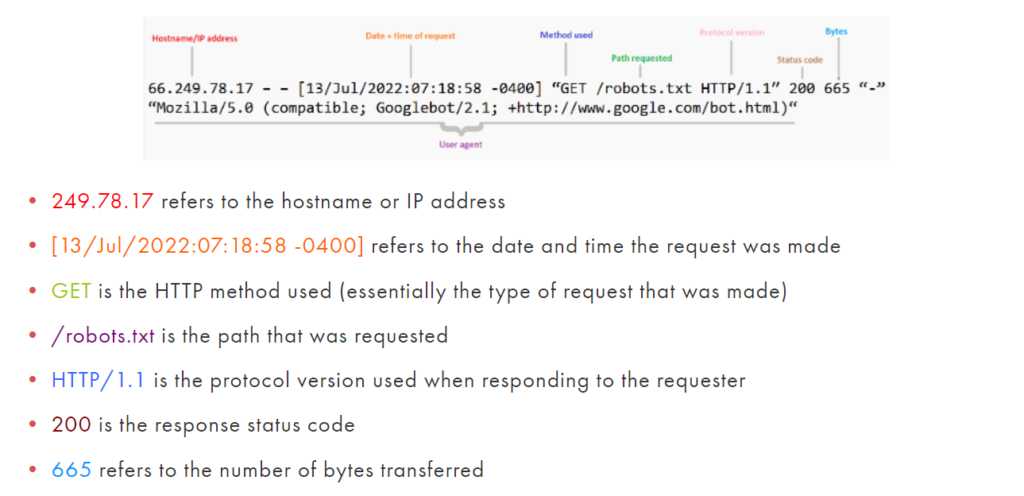

Most of these problems can be diagnosed by reviewing server log files and analyzing HTTP status codes such as 2xx (success), 4xx (client errors, for example 404 page not found) and 5xx (server errors).
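
As a rough illustration of that triage, the sketch below groups a list of status codes by class so the problem responses stand out. The function names and the sample codes are made up for this example; in practice the codes would come from your own logs or crawler export.

```python
from collections import Counter

def status_class(code: int) -> str:
    """Map an HTTP status code to a human-readable class label."""
    labels = {
        2: "2xx success",
        3: "3xx redirect",
        4: "4xx client error",
        5: "5xx server error",
    }
    return labels.get(code // 100, "other")

def summarize(codes):
    """Count how many responses fall into each status class."""
    return Counter(status_class(code) for code in codes)

# Hypothetical status codes collected from a crawl or a log file.
sample_codes = [200, 200, 301, 404, 410, 500, 503]
summary = summarize(sample_codes)
for label, count in sorted(summary.items()):
    print(label, count)
```

A growing share of 4xx or 5xx responses for URLs you expect to be indexed is usually the first thing to investigate.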

2. Site Structure and Duplicate Content

Duplicate content occurs when Google finds the same content on different pages. Each page is technically located at a unique URL. Your URL structure has to conform to some widely accepted standards, in order to make it easy for search engine bots to crawl and index your site.

3xx (redirects) HTTP codes, canonicalization, pagination, HTTP and HTTPS protocols, subdomains created for various purposes, site mirrors, and URL parameters are the various factors that affect your site structure.

3. Page Loading Speed

Page loading speed has been an acknowledged Google ranking factor for mobile searches since 2018. The availability of mobile pages, the existence of AMP pages, asynchronous loading, dynamic scripts written in JavaScript and jQuery, and the various other elements of the web page all affect page loading speed.

Page speed is part of Google’s page experience signals. A related signal is Core Web Vitals, which involves checking not only how fast your site loads but also how soon your users can interact with it.
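
To make the Core Web Vitals idea more concrete, here is a minimal sketch that rates three metrics against the thresholds Google documents at the time of writing: Largest Contentful Paint (2.5 s / 4 s), Interaction to Next Paint (200 ms / 500 ms) and Cumulative Layout Shift (0.1 / 0.25). The dictionary keys and the function name are our own choices for the example, and the thresholds may change, so check the current documentation before relying on them.

```python
# (good, poor) boundaries per metric; values at or below "good" pass,
# values above "poor" fail, anything in between needs improvement.
THRESHOLDS = {
    "lcp_seconds": (2.5, 4.0),   # Largest Contentful Paint
    "inp_ms": (200, 500),        # Interaction to Next Paint
    "cls": (0.1, 0.25),          # Cumulative Layout Shift
}

def rate(metric: str, value: float) -> str:
    """Return 'good', 'needs improvement' or 'poor' for one measurement."""
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"

# Hypothetical field measurements for one page.
measurements = {"lcp_seconds": 2.1, "inp_ms": 350, "cls": 0.3}
for metric, value in measurements.items():
    print(metric, value, rate(metric, value))
```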

4. Geographic Targeting

Setting the wrong region or country on Google properties such as Search Console, Google Analytics and Google Ads, or ignoring international SEO guidelines such as hreflang attributes, can lead to major problems – the core audience you’re targeting may not see your content at all!
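
For reference, hreflang annotations are usually added as link elements in the head of each language or region version of a page. The sketch below generates them from a mapping of language-region codes to URLs. The helper name and the example.com URLs are placeholders, not part of any real setup.

```python
from html import escape

def hreflang_tags(alternates, default_url=None):
    """Build <link rel="alternate"> tags for each language/region version.

    alternates: dict mapping an hreflang code (e.g. "en-us") to a URL.
    default_url: optional fallback page, emitted as hreflang="x-default".
    """
    tags = [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}" />'
        for code, url in alternates.items()
    ]
    if default_url:
        tags.append(
            f'<link rel="alternate" hreflang="x-default" href="{escape(default_url)}" />'
        )
    return tags

# Placeholder URLs for a site with US English and German versions.
tags = hreflang_tags(
    {"en-us": "https://example.com/us/", "de-de": "https://example.com/de/"},
    default_url="https://example.com/",
)
print("\n".join(tags))
```

Each version of the page should carry the full set of tags, including one that points to itself, so that the annotations are reciprocal.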

5. Security

Security is another acknowledged ranking factor in the Google search algorithm, especially the usage of HTTPS for your website. Security is important at every level – without it, you lose trust very quickly. You need to make sure your CMS and web server are protected from viruses, ransomware, hackers and distributed denial of service (DDoS) attacks. If you don’t focus on preventing security lapses, at some point your site might be unavailable or unusable for indefinite periods of time, posing a terminal risk for your business.

Causes of Page Indexing Problems

There is a precise funnel through which pages on websites get into the Google index before they show up in the search results.

This involves three key phases:

- Google learns about or “discovers” the page. The page is then queued for crawling by Googlebot.

- Googlebot crawls the page and “renders” it without any difficulty. If there are any code, structure, or duplication problems at this stage, Google may not process the page any further.

- If the page can be crawled frequently and rendered without any problems, it is added to the index. The page will then show up in search results if it matches the intent of queries.

To find the issue that is affecting the crawling and indexing of your pages, you need to understand this funnel very precisely. Only then can you identify exactly at which stage the problem is occurring.

Problem 1: Google Isn’t Discovering Your Pages

What if Google is not even aware of your pages? What if it isn’t crawling the site? What do you do?

To learn more about how Googlebot goes through your site, you can check your server logs. How frequently is it visiting the site? Which pages is it crawling and which pages are left out?
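
As a starting point for that kind of log review, here is a minimal sketch that counts Googlebot requests per URL and status code. It assumes your server writes the common "combined" access log format; the regular expression, the function name and the sample lines are ours and will need adjusting to your own setup.

```python
import re
from collections import Counter

# Matches the request, status and user-agent parts of a combined-format log line.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)

def googlebot_hits(lines):
    """Count requests per (path, status) where the user agent claims to be Googlebot."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if match and "Googlebot" in match.group("agent"):
            hits[(match.group("path"), int(match.group("status")))] += 1
    return hits

# Made-up sample lines; in practice, read them from your access log file.
sample_log = [
    '66.249.66.1 - - [10/May/2024:10:00:00 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [10/May/2024:10:05:00 +0000] "GET /old-page HTTP/1.1" 404 312 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/May/2024:10:06:00 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (Windows NT 10.0)"',
]

hits = googlebot_hits(sample_log)
for (path, status), count in hits.most_common():
    print(path, status, count)
```

Keep in mind that the user-agent string can be spoofed, so this only shows requests that claim to be Googlebot. Pages that never appear in the output are the ones Googlebot is leaving out.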

Once you understand the indexing process and compare it with how Googlebot goes through your site, you can use the following ideas to speed up crawling of your pages:

- Create an XML sitemap with crawling priorities and keep updating it as new pages are added to your site.

- Make sure your robots.txt file is perfectly configured and that each section of your site is allowed or disallowed as you want.

- Get your internal linking right. Don’t link haphazardly. Rather, use a hub-and-spoke strategy to link both top-down and bottom-up along the informational structure of your website.

- Try to attract incoming web traffic from other websites. Share your pages on social media and get your digital PR machine going.

- Build external links to your hub pages from relevant and high-authority sites. From there, you could link to the relevant pages. This way, your pages get discovered, crawled and indexed faster, and more domain authority will flow through to them.
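
To illustrate the first idea in the list above, here is a minimal sketch that builds an XML sitemap using Python’s standard library. The page list, URLs and priority values are placeholders; on a real site you would generate them from your CMS or database whenever pages are added.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """Build a sitemap string from (url, lastmod ISO date, priority) tuples."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod, priority in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
        ET.SubElement(url, "priority").text = f"{priority:.1f}"
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

# Placeholder pages: hub page first, then a supporting page.
pages = [
    ("https://example.com/", "2024-05-01", 1.0),
    ("https://example.com/blog/", "2024-05-10", 0.8),
]
sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

Google’s sitemap documentation says it ignores the priority value, so an accurate lastmod date is the field worth the most care. Other search engines may treat priority differently.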

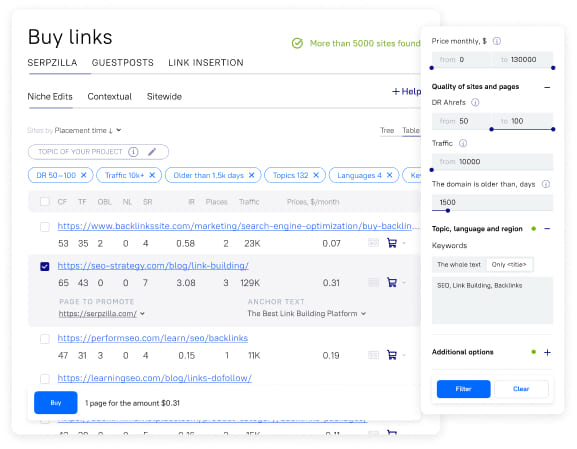

Serpzilla can be your friend here. You can quickly build links with a range of domain authority scores (for increased trust) as well as from a region of your choice (for increased relevance) and Googlebot will immediately follow through from those sites. You can also go for a tiered backlink structure as per your budget and strategy.

Problem 2: Google Is Crawling But Not Indexing Your Pages

Often, many of your pages are crawled but not indexed. You can manually check any URL to see if it is indexed using the Google Search Console. If it is not, the shortest route to getting it indexed is the “Request Indexing” button.

You can take further steps to get your URLs indexed:

1. See the Indexing > Pages report in Google Search Console to identify which pages are not indexed and why. This report helps you identify all your non-indexed pages with the precise reason or problem that is affecting them.

You can solve these reasons one by one according to their priority and the number of pages affected.

2. Check all the HTTP response codes, directives, and usual suspects that are easy to overlook. Have you closed your meta tags? Are you using the correct syntax in robots.txt? Have you used the correct URLs in the rel="canonical" attribute?

3. Verify that the content on the page is high-quality. If there is very little unique content on the page, if it is repetitive or generalized, or worse, if it is low-effort AI-generated or copied text, your page is unlikely to be indexed anytime soon. Follow the latest EEAT guidelines and cover more unique topics on the page if necessary.

4. A very important point: the UX of the page must be intuitive and seamless. If there are any problems with the mobile friendliness, rendering, interactivity, stability or consistency of the page, it may fail the Core Web Vitals assessment, which hurts its chances of being indexed and ranked.

5. Finally, do give a thought to the “demand” or search intent of the topic of the page. Is anyone searching for it? Does the content really solve a problem or answer a question? If people don’t need it, Google doesn’t either.
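
The robots.txt and canonical checks from step 2 can be partly automated. The sketch below uses Python’s standard library to test whether a URL is blocked by a robots.txt file and to pull the canonical URL out of a page’s HTML. The function and class names are our own, the sample robots.txt and HTML are invented, and Python’s parser does not replicate every detail of how Google interprets robots.txt, so treat the result as a first pass rather than a verdict.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser

def is_crawl_allowed(robots_txt: str, url: str, agent: str = "Googlebot") -> bool:
    """Check a URL against robots.txt rules using the standard-library parser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)

class CanonicalFinder(HTMLParser):
    """Record the href of the last <link rel="canonical"> tag in a document."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "link" and (attributes.get("rel") or "").lower() == "canonical":
            self.canonical = attributes.get("href")

# Invented inputs for illustration.
robots_txt = "User-agent: *\nDisallow: /private/\n"
page_html = '<html><head><link rel="canonical" href="https://example.com/blog/"></head></html>'

print(is_crawl_allowed(robots_txt, "https://example.com/private/page"))
print(is_crawl_allowed(robots_txt, "https://example.com/blog/"))

finder = CanonicalFinder()
finder.feed(page_html)
print(finder.canonical)
```

If the canonical URL points somewhere other than the page you expect to be indexed, that alone can explain why the page is crawled but left out of the index.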

Content Pruning: Remove Your Bad Pages

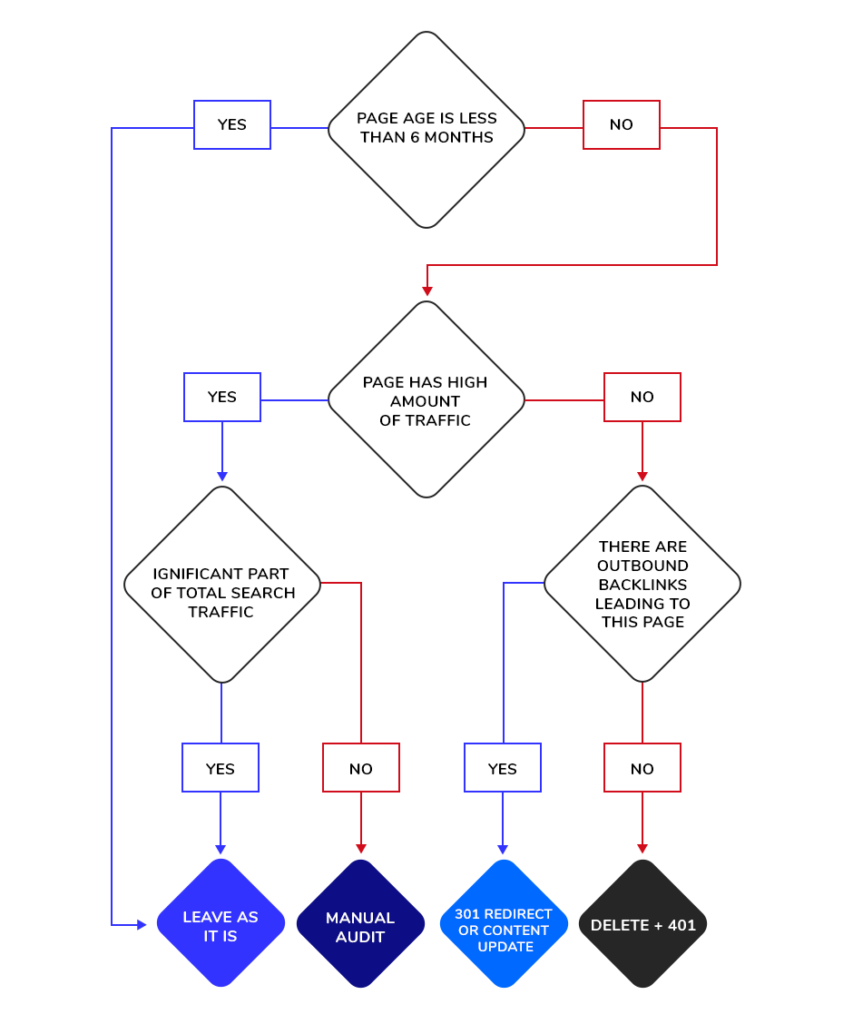

What is the best way to deal with pages that have zero traffic? Just delete them.

Auditing your content and removing obsolete pages from the index regularly will make Google crawl, index and cache your newer and important pages more frequently. If you have a large and complex site that is facing problems in indexing and ranking, it makes sense to clean up unwanted pages that are cannibalizing the rankings of your pillar content.

The logic is quite simple: When you have fewer unnecessary low-quality or duplicate pages, the overall ratio of high-quality pages on your site improves. Consequently, the following things improve:

- Domain authority and trustworthiness of your site

- Average time spent by users on your page

- Browsing depth, or number of pages users visit per session

- Conversion rates

- Crawl time and frequency of Googlebot
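
To find pruning candidates in the first place, you can start from a page-level export of your traffic data. The sketch below assumes a CSV with "url" and "clicks" columns, which is a hypothetical layout; adjust the column names to whatever your analytics or Search Console export actually uses.

```python
import csv
import io

def pruning_candidates(csv_text: str, max_clicks: int = 0):
    """Return URLs whose click count is at or below max_clicks."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [row["url"] for row in reader if int(row["clicks"]) <= max_clicks]

# Made-up export covering some reporting period.
export = """url,clicks
https://example.com/pillar-guide,420
https://example.com/old-news-2016,0
https://example.com/tag/misc,0
"""

candidates = pruning_candidates(export)
print(candidates)
```

Treat the output as a review list, not a delete list: a page with no search clicks may still earn links or serve existing customers.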

There are different ways to request (or induce) removal of your pages from the Google index:

- Use the “Disallow” directive in robots.txt to stop Google from crawling certain sections or URLs of your site in the first place. Note that this blocks crawling; on its own it does not remove URLs that are already in the index.

- Add the robots meta tag with “noindex” to the HTML head of the page, or send the equivalent X-Robots-Tag HTTP header. The next time Google crawls the URL, it will remove the page from the index.

- Simply delete the page from your server so that the URL returns a 404 or 410 status. Google will drop it from the index after it recrawls the URL.

- Permanently redirect (301) the page to one with updated content if you have any.

- The most direct way is to request removal using the Removals Tool in the Google Search Console.
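
Before and after applying one of these methods, it helps to confirm what a page is actually telling search engines. A noindex signal can live in a robots meta tag or in an X-Robots-Tag response header, and the sketch below checks both. The names are ours and the sample inputs are invented. It is deliberately simplified: it ignores user-agent prefixes in the header (such as "googlebot: noindex").

```python
from html.parser import HTMLParser

class RobotsMetaFinder(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        if tag == "meta" and name in ("robots", "googlebot"):
            content = attributes.get("content") or ""
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )

def is_noindexed(headers: dict, html: str) -> bool:
    """Return True if the header or the HTML carries a noindex directive."""
    header_value = ""
    for key, value in headers.items():
        if key.lower() == "x-robots-tag":
            header_value = value
    header_directives = {part.strip().lower() for part in header_value.split(",")}

    finder = RobotsMetaFinder()
    finder.feed(html)
    return "noindex" in header_directives or "noindex" in finder.directives

# Invented responses for illustration.
print(is_noindexed({}, '<head><meta name="robots" content="noindex, follow"></head>'))
print(is_noindexed({"X-Robots-Tag": "noindex"}, "<head></head>"))
print(is_noindexed({}, '<head><meta name="robots" content="index, follow"></head>'))
```

One thing to watch for: Google can only see a noindex directive on a page it is allowed to crawl, so combining noindex with a robots.txt Disallow for the same URL can leave the page in the index.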

Here’s a flowchart to help you decide if cleaning pages from the index is necessary for you or not:

A caveat: If you don’t have major problems in indexing and ranking, and if you’re sure of the quality and relevance of your content, then pruning is unlikely to have a noticeable effect. In such cases, the juice is not worth the squeeze.

Other SEO Problems?

We hope this article addresses all the issues you might be facing in indexing your pages. Stay tuned to our Technical SEO Problem Solving series for more solutions and strategies in optimizing your pages for better rankings!