Imagine this scenario.

You published several pieces of content on your website. Days pass, and yet you see no significant change in the site’s search engine rankings.

Now imagine this scenario.

You had several pages already showing up on the SERPs. One fine day, they’re gone. Just like that.

In the first scenario, your pages were not indexed by search engines. In the second one, chances are high that your pages fell out of the search engine index.

What is search engine indexing?

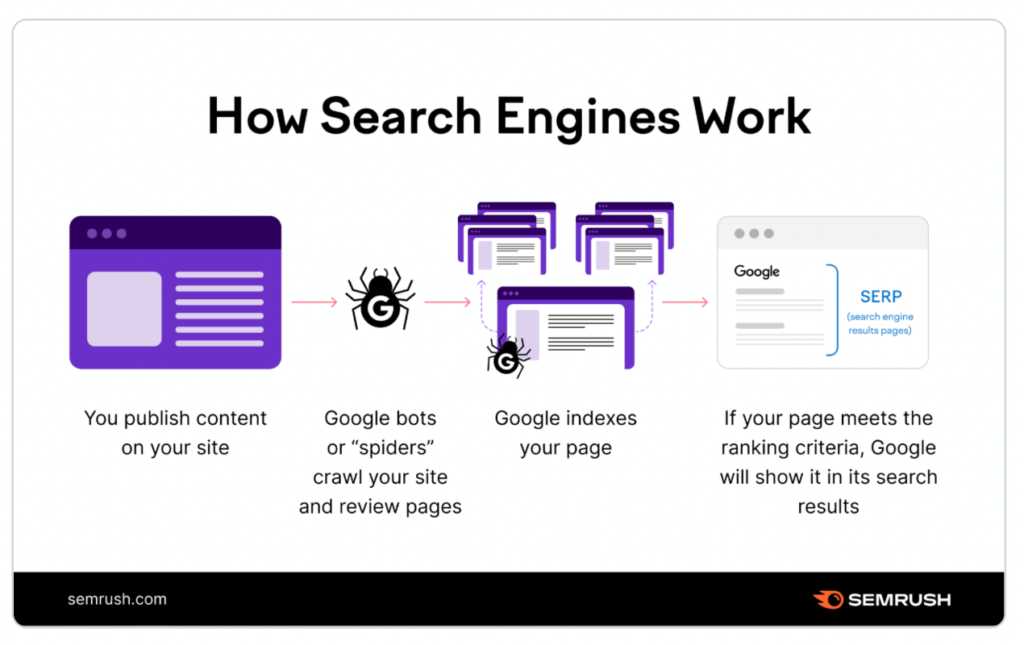

Search engines like Google use a three-pronged approach to deliver the most relevant results.

- Crawling – bots discover and scan websites via embedded links.

- Indexing – the search engine analyzes the crawled pages and stores information about them in its index, a huge database of web pages.

- Ranking and Serving – when a user searches, the search engine produces a SERP listing the most relevant pages from its index.

Indexing, therefore, is a critical part of SEO. It ensures that your web pages are stored in the search engine’s database and can be reached through organic search. During indexing, the search engine analyzes elements such as the text, multimedia, meta tags, links, and overall quality of the content.

Pages that aren’t indexed, on the other hand, don’t appear on the SERPs at all, which hurts their visibility and traffic potential. This can happen for various reasons.

How To Check If A Page Is Indexed In Google

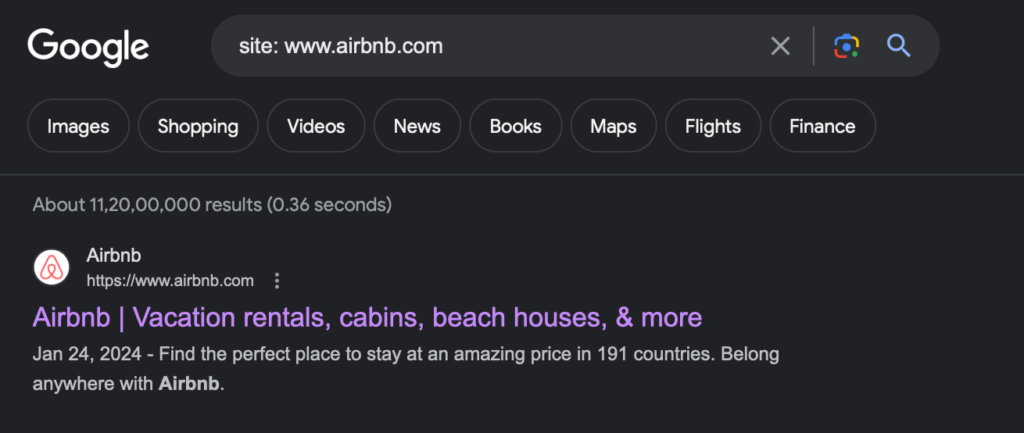

To quickly check whether a page is indexed in Google or not, you can use either of these two methods:

Step 1: Type the search operator “site:” or “inurl:” before the page URL. For example, to check whether the page https://www.example.com/mypage.html is in Google’s index, search for “site:www.example.com/mypage.html” or “inurl:www.example.com/mypage.html”. If the page is in Google’s index, it should appear in the search results.

Step 2: Sign in to Google Search Console and open the Pages report under Indexing (formerly called Coverage) to see which pages on your site are indexed, which aren’t, and why. To check a single URL, enter it in the URL Inspection tool at the top of Search Console.
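If you need to run the Step 1 check for many pages, the queries are easy to generate. The minimal Python sketch below (the function names are hypothetical) drops the http/https scheme, builds the “site:” and “inurl:” queries, and turns each into a Google search link to open in a browser. It doesn’t fetch or parse results, and keep in mind that site: results are a quick indication rather than a complete list, so Search Console remains the more reliable check.

```python
from urllib.parse import quote_plus, urlsplit


def index_check_queries(page_url: str) -> list[str]:
    """Build the site: and inurl: queries for a page URL (scheme dropped)."""
    parts = urlsplit(page_url)
    target = parts.netloc + parts.path
    if parts.query:
        target += "?" + parts.query
    return [f"site:{target}", f"inurl:{target}"]


def google_search_url(query: str) -> str:
    """Return a Google search link for the query, URL-encoded."""
    return "https://www.google.com/search?q=" + quote_plus(query)


if __name__ == "__main__":
    for query in index_check_queries("https://www.example.com/mypage.html"):
        print(query, google_search_url(query))
```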

How Google Indexing Works

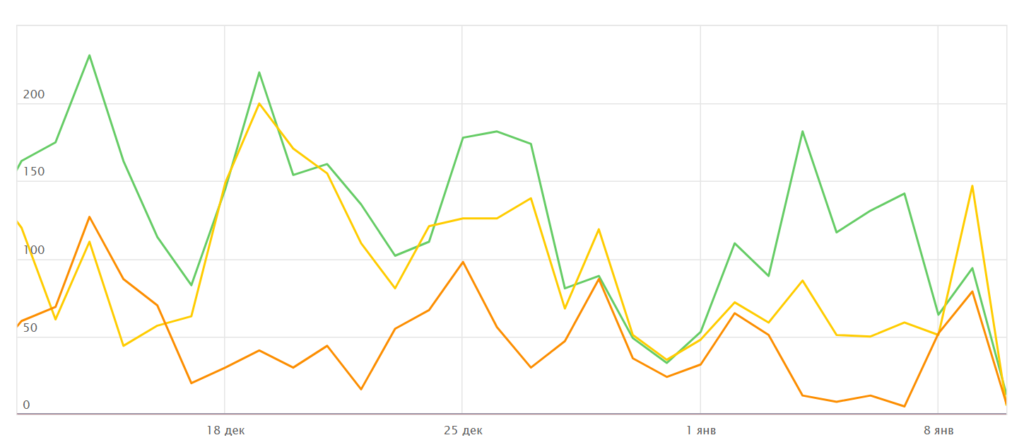

Indexing happens in several stages that work like a funnel:

- Page Discovery: Search engines discover new pages by following links from already indexed pages, reading sitemaps, receiving URLs submitted by webmasters, and systematically crawling the Internet. For example, Google might discover a bicycle brand’s website through a link in a “Best Christmas Gifts 2024” listicle.

- Browsing and Crawling Content: Once a page is discovered, the search engine crawls its content, including text, images, video, audio, and other elements. It also examines the HTML structure, metadata, headings, and other factors that make up the page. If these elements are missing or can’t be processed, the page may not get indexed.

- Indexing: After examining the content, the search engine adds information about the page to its search index, a vast database of billions of web pages. In the process, it records the links pointing to the page, identifies keywords and phrases, categorizes the content, and creates other records needed for later searches.

- Ranking: When users initiate search queries, the search engine leverages its index to determine the most pertinent results. This involves matching keywords and phrases from the query with the information stored in the index. Results are then ranked using diverse algorithms, taking into account factors such as content relevance, authority, and page popularity.
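The matching step described under Ranking relies on an inverted index, a structure that maps each term to the pages containing it. The toy Python sketch below shows the idea under heavy simplifications: the page data and function names are made up, and the score (a plain count of query terms on the page) stands in for the many signals real search engines actually use.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages: dict[str, str]) -> dict[str, dict[str, int]]:
    """Inverted index: term -> {page URL: occurrences of the term on that page}."""
    index: dict[str, dict[str, int]] = defaultdict(dict)
    for url, text in pages.items():
        for term in tokenize(text):
            index[term][url] = index[term].get(url, 0) + 1
    return index


def search(index: dict[str, dict[str, int]], query: str) -> list[str]:
    """Return pages containing every query term, highest score first.

    The score is simply the total count of query terms on the page, a crude
    stand-in for the ranking signals a real search engine combines.
    """
    terms = tokenize(query)
    if not terms:
        return []
    # Intersect the posting lists so only pages with all terms remain.
    matches = set(index.get(terms[0], {}))
    for term in terms[1:]:
        matches &= set(index.get(term, {}))
    scores = {url: sum(index[t][url] for t in terms) for url in matches}
    return sorted(scores, key=lambda url: (-scores[url], url))


if __name__ == "__main__":
    pages = {
        "/gifts": "Best Christmas gifts: a bicycle, a bicycle helmet",
        "/bikes": "Bicycle brand catalog",
    }
    print(search(build_index(pages), "bicycle"))  # ['/gifts', '/bikes']
```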

At each of these stages, a page can fail to make it into the index or drop out of it. Remember that indexing is an ongoing process: the index is updated regularly, and the changes are reflected on the SERPs.

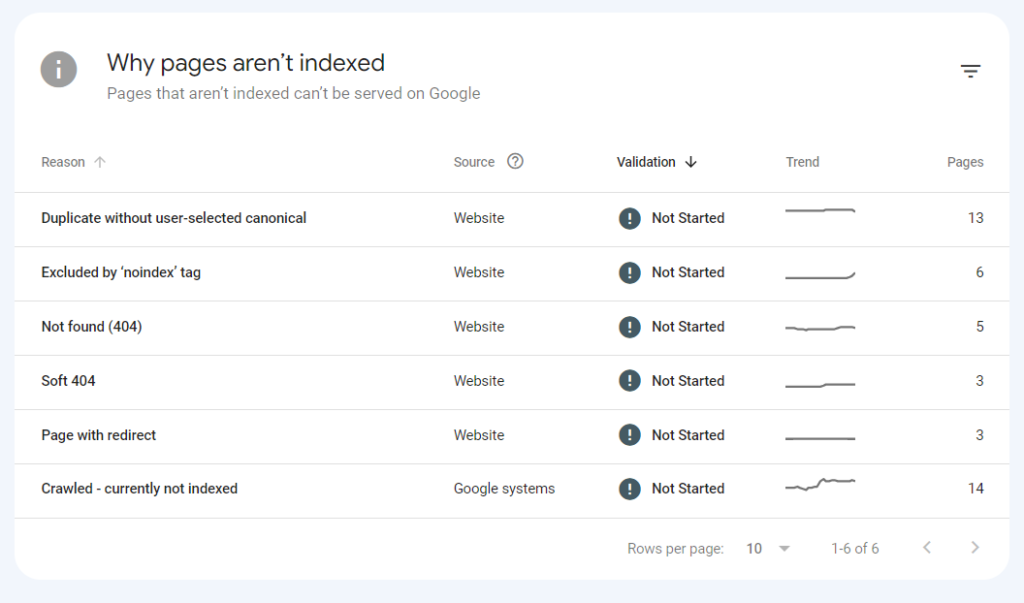

Note: You can find the reason why your web page is missing from the index with the help of Google Search Console.

Let’s look at two more concepts that help explain indexing in depth:

Google Supplemental (additional Google index)

Google introduced the Supplemental Index, a separate index for pages that fell short of the standards of Google’s main index. Pages ended up there if Google judged them less relevant, thin on information, or duplicative.

The Supplemental Index was meant to keep the main index clean and current by keeping low-quality or duplicate pages out of the primary search results. Critics pointed out that it sometimes misjudged page relevance, which could keep valuable content out of search results.

In 2007, Google stopped publicly labeling results from the Supplemental Index and said that all pages would be treated as part of the main index. From then on, Google focused on refining its indexing and ranking methods to give users the most relevant results.

Consequently, the concept of “Google Supplemental” is obsolete, since Google no longer maintains a separate supplementary index.

Google Cache

Google Cache is a service provided by Google that generates duplicates of web pages and archives them in its database. This functionality enables users to access versions of web pages stored by Google, even in cases where the original page is temporarily inaccessible or has been removed.

When a user searches on Google, the search engine looks up relevant pages in its index. Alongside the link to the original page on its server, Google can offer a cached copy of that page from its archive.

Google Cache also lets you inspect the most recently saved version of a page. If a webmaster updates a page’s content, comparing the cached copy with the live page shows whether Google has picked up the changes yet: if the cached copy still shows the old content, the update hasn’t been processed.

Google Cache is useful for many practical purposes, such as viewing pages while a site is temporarily down, tracking changes to pages, and preserving page content for archival purposes. Keep in mind, however, that cached versions may be slightly outdated and don’t always reflect the current state of the original page. Note also that Google removed cached-page links from its search results in 2024, so these copies are no longer readily available to users.

Note: A cached copy doesn’t necessarily indicate a page’s indexing status, although assuming otherwise is a common misconception. A page may be missing from the index even though Google holds a cached copy of it, and vice versa.

Now that we’ve covered the fundamental concepts, let’s look at why pages might drop out of the index or never get indexed.

Why Pages Fall Out of Google Index or Aren’t Indexed

Duplicate Pages

Having duplicate pages on a website can cause indexing issues. When a site contains multiple identical or very similar pages, search engines may struggle to determine which one is the main version to show in search results.

As a result, search engines may index only one of the duplicates, not necessarily the one you prefer, and ignore the rest. This can reduce overall site traffic and hurt the site’s rankings in search results.

To mitigate this problem, it’s essential to take the following steps:

1. Utilize unique content for each page on the site.

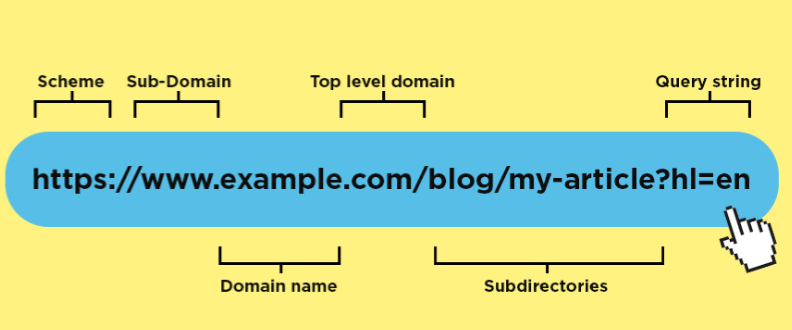

2. Use a clear URL structure in which each page is available at one unique URL.

3. When using duplicate content, link to the original page instead of creating copies.

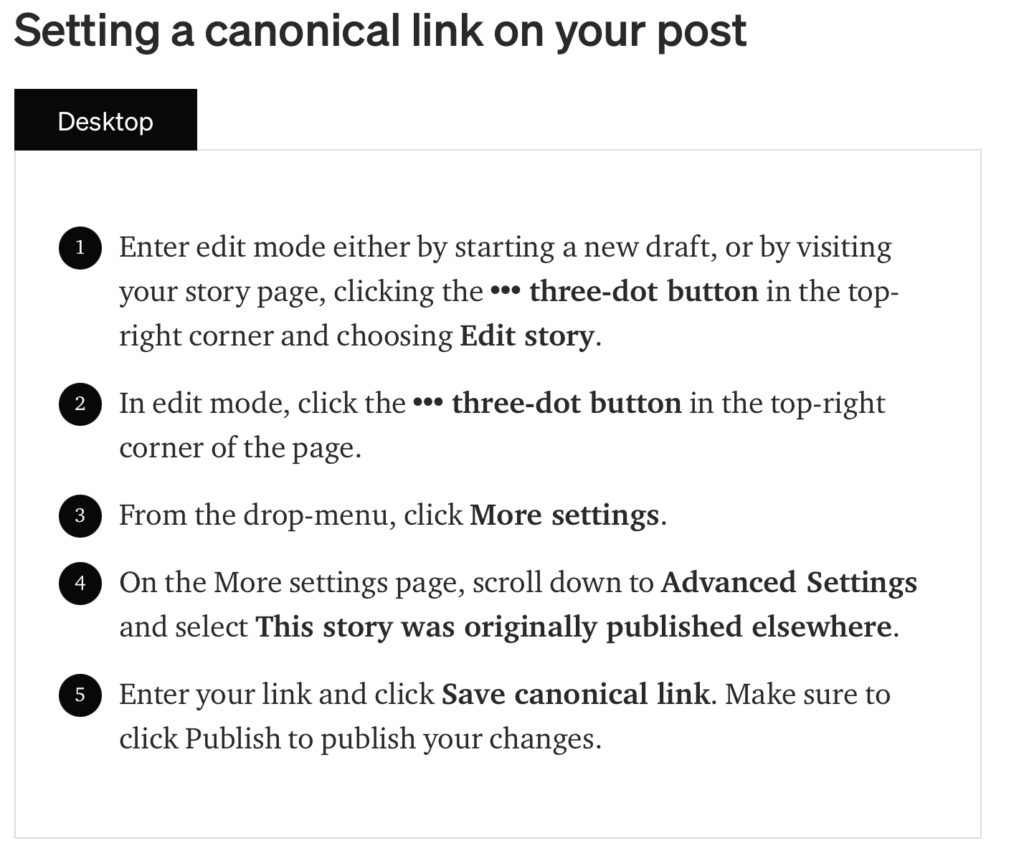

4. Use rel="canonical" tags on duplicate pages to point search engines to the original (canonical) version.

5. Set up 3xx redirects (usually 301) correctly, for example from duplicate URL variants to the main page.

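6. Use the “noindex” meta tag to keep duplicate pages out of the index. Avoid relying on robots.txt for this: it blocks crawling rather than indexing, and if a page is blocked there, search engines can’t see its noindex tag.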
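Before applying the fixes above, it helps to know which pages are actually duplicates. The minimal Python sketch below assumes you already have the extracted text of each page (for example, from a crawler export) and groups URLs whose text is identical after normalizing case and whitespace. The function names are hypothetical, and the approach only catches exact duplicates; near-duplicates need fuzzier techniques such as shingling or SimHash.

```python
import hashlib
import re
from collections import defaultdict


def content_fingerprint(text: str) -> str:
    """Hash page text after collapsing whitespace and lowercasing it."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicate_groups(pages: dict[str, str]) -> list[list[str]]:
    """Group URLs whose normalized text is identical."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url, text in pages.items():
        groups[content_fingerprint(text)].append(url)
    # Only fingerprints shared by more than one URL indicate duplicates.
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]
```

Each group is a candidate for a canonical tag or a redirect to the version you want indexed.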

Incorrect Robots.txt Setting

Indexing issues can often be traced to incorrectly configured rules in the robots.txt file. Here are the most common problems:

- Incorrect or missing robots.txt settings: Make sure your robots.txt file gives crawlers accurate instructions. Check the syntax and confirm that important pages or sections of the site are not accidentally blocked from crawling.

- Blocking the entire site: A Disallow: / rule under User-agent: * blocks crawlers from your entire site. Remove or correct it so search robots can crawl and index your site.

- Incorrect blocking of individual pages: Specific pages or sections of the site may be blocked by mistake. Review your directives regularly to make sure they don’t prevent the indexing of pages you want in search, and adjust the rules accordingly.

- Transitions from an old version of a site: This is perhaps the most common error of all. If you’ve migrated to a new version of your site, rules carried over from the old robots.txt file might block crucial pages on the new site. Make sure the new robots.txt file contains only instructions that fit the updated site.

- Last step: After editing the robots.txt file, make sure it is uploaded to the server and available at the site root (/robots.txt). Changes may take some time to be picked up by search engines, so don’t expect immediate results.
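To catch the mistakes listed above before they cost you indexed pages, you can test a list of important URLs against your robots.txt rules. The sketch below uses Python’s standard urllib.robotparser; the function name and sample rules are hypothetical. Be aware that Python’s parser follows the original robots.txt conventions (the first matching rule wins, and * or $ wildcards inside paths aren’t supported), while Google picks the most specific matching rule, so treat the output as a first check and confirm in Search Console.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_lines: list[str], urls: list[str],
                 user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt rules block for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_lines)  # parse rules from text instead of fetching a URL
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    # A rule left over from a staging site that blocks everything.
    leftover = ["User-agent: *", "Disallow: /"]
    print(blocked_urls(leftover, ["https://example.com/", "https://example.com/blog/"]))
```

To check your live file instead, call set_url() with your robots.txt address and then read() in place of parse().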

Low Quality Pages

Remember Google’s E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness) guidelines? They marked a big change, and their effect on SEO continues to this day. Nowadays, unhelpful, low-value content is a common reason for poor indexing. Here’s how to deal with it:

- Ensure your content stands out for its uniqueness and interest. If your page lacks these qualities, consider updating the content. Review the existing material and enhance it to make it more valuable and informative for users. Incorporate relevant information, elevate text quality, and deliver valuable, unique insights that cater to your visitors.

- Remove or conceal unnecessary content that serves no purpose, is outdated, or lacks demand. Utilize the “noindex” tag to prevent search engines from indexing such content. However, exercise caution not to hide material that could be beneficial to users.

- If your content feels oversaturated or over-optimized, consider dividing it into separate pages. This is particularly helpful if a page contains mixed or irrelevant content. By creating distinct pages with more specific topics, you assist search engines in better understanding each page’s focus, enabling more accurate indexing.

- Evaluate your keyword use to avoid keyword stuffing. Find the right balance by studying top-ranking competitors and how often they use the keyword. Overusing keywords can backfire, so aim for a balanced approach.
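One common way to put a number on keyword usage is keyword density: how many times the keyword appears per 100 words. There is no official “correct” ratio; the number is only useful for comparing your page with top-ranking competitors, as suggested above. The minimal Python sketch below uses simple ASCII word splitting, and its function name is hypothetical.

```python
import re


def keyword_density(text: str, keyword: str) -> float:
    """Occurrences of keyword (a word or phrase) per 100 words of text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    phrase = keyword.lower().split()
    if not words or not phrase:
        return 0.0
    n = len(phrase)
    # Slide an n-word window over the text and count exact phrase matches.
    count = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == phrase)
    return 100 * count / len(words)
```

Running it on your page and on a few competing pages gives a rough sense of whether your usage is out of line.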

How to Improve Search Indexation For Your Website?

Add Google Analytics and Google Search Console

Setting up Google Analytics (GA) and Google Search Console (GSC) can support your site’s indexing. GSC lets you submit sitemaps, request indexing of specific URLs, and see which pages are excluded and why, while GA shows which pages get traffic, helping you decide which pages are important enough to prioritize for indexing.

No Duplicates

If your site has duplicate pages, search engines may index only one of them or exclude both from the index. To avoid such problems, add a rel="canonical" tag to each page, use clean, human-readable URLs, and set up redirects correctly.
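Duplicate URLs often come from trivial variants of one address: http vs. https, uppercase host names, trailing slashes, tracking parameters. The Python sketch below collapses such variants onto one preferred URL and emits the matching rel="canonical" tag. Its rules (prefer HTTPS, remove the trailing slash, sort the remaining parameters, drop everything in the hypothetical TRACKING_PARAMS set) are assumptions; choose whatever convention fits your site and apply it consistently in canonical tags, redirects, and sitemaps.

```python
import html
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical list of parameters that never change page content on this site.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "gclid", "fbclid"}


def canonical_url(url: str) -> str:
    """Collapse common variants of a URL onto one preferred form."""
    parts = urlsplit(url)
    path = parts.path or "/"
    if path != "/":
        path = path.rstrip("/") or "/"  # assumed house rule: no trailing slash
    params = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in TRACKING_PARAMS
    ]
    query = urlencode(sorted(params))
    # Prefer HTTPS, lowercase the host, and drop the #fragment.
    return urlunsplit(("https", parts.netloc.lower(), path, query, ""))


def canonical_tag(url: str) -> str:
    """Return the rel="canonical" link element for the page's head section."""
    return f'<link rel="canonical" href="{html.escape(canonical_url(url))}">'
```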

Check The Site Health

- Site speed: If your site’s pages are too heavy and slow to load, search engines may slow down crawling to avoid overloading your server, so fewer pages get indexed.

- HTTP errors: If your server returns HTTP errors such as 404 (Not Found) or 500 (Internal Server Error), the affected pages can’t be indexed, and persistent errors can cause already indexed pages to drop out of the index.

Various SEO audit tools can help you find these problems.
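For a quick spot check of HTTP errors, you can request each important URL and look at the status code. The Python sketch below uses only the standard library; the function names and the User-Agent string are placeholders. It sends HEAD requests and doesn’t retry servers that reject them, so a dedicated audit tool will be far more thorough.

```python
import urllib.error
import urllib.request
from typing import Optional


def fetch_status(url: str, timeout: float = 10.0) -> Optional[int]:
    """Return the HTTP status code for url, or None if the server is unreachable."""
    request = urllib.request.Request(
        url, method="HEAD", headers={"User-Agent": "site-health-check"}
    )
    try:
        # urlopen follows redirects, so this is the status of the final URL.
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:  # 4xx and 5xx responses land here
        return err.code
    except OSError:  # DNS failures, refused connections, timeouts
        return None


def classify(status: Optional[int]) -> str:
    """Map a status code to a rough indexing-health label."""
    if status is None:
        return "unreachable"
    if 200 <= status < 300:
        return "ok"
    if 300 <= status < 400:
        return "redirect"
    if 400 <= status < 500:
        return "client error"
    return "server error"
```

Call fetch_status() for each URL you care about and pass the result to classify(); anything other than “ok” deserves a closer look.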

Include an XML Sitemap

A sitemap is an XML file that lists the pages of your site and helps search engines understand which pages to index. Each page goes in its own url element, with the page address in a nested loc element. For example, the entry for the page “example.com/page1.html” looks like this:

```xml
<url>
  <loc>http://example.com/page1.html</loc>
</url>
```

After generating your sitemap, upload it to the server and add a link to it in your robots.txt file. This helps search engines find and index all the pages on your site. For a large site with many pages, consider creating multiple sitemaps grouped by page type: for instance, one sitemap for blog pages and another for product pages.
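Writing sitemap XML by hand quickly becomes error-prone. The Python sketch below generates a complete sitemap, including the urlset wrapper element and the sitemaps.org namespace that a single url entry doesn’t show, and builds one sitemap per page type. It uses only the standard library; the function names and the sitemap-TYPE.xml file names are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000  # per-file limit in the sitemaps.org protocol


def build_sitemap(urls: list[str]) -> str:
    """Return a complete sitemap document with one url/loc entry per URL."""
    if len(urls) > MAX_URLS_PER_SITEMAP:
        raise ValueError("too many URLs for one sitemap; split them up")
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # ElementTree escapes &, <, >
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


def build_sitemaps_by_type(urls_by_type: dict[str, list[str]]) -> dict[str, str]:
    """One sitemap per page type, keyed by a hypothetical file name."""
    return {
        f"sitemap-{page_type}.xml": build_sitemap(urls)
        for page_type, urls in urls_by_type.items()
    }
```

Each generated file can then be referenced from robots.txt with a line such as “Sitemap: https://example.com/sitemap-blog.xml”.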

Say Bye To Junk Pages

- Review search engine reports: Employ tools offered by search engines, such as Google Search Console, to obtain reports on pages that faced indexing issues or were identified as problematic.

- Examine analytics data: Use analytics tools like Google Analytics to find pages with little or no traffic. Very low-traffic pages may be candidates for improvement or deletion, which can raise the overall quality and value of your site.

- Leverage content analysis tools: Utilize tools like Screaming Frog and Sitebulb to analyze your site’s content and detect undesirable pages. These tools can highlight pages with insufficient content, duplicates, or other issues.
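If your analytics tool can export page-level traffic as CSV, a few lines of Python can shortlist low-traffic pages. In the sketch below, the column names (page, sessions), the threshold of 10 sessions, and the function name are assumptions to adjust to your own export; treat the result as a list to review, not a list to delete.

```python
import csv
from typing import Iterable


def low_traffic_pages(rows: Iterable[str], threshold: int = 10,
                      page_col: str = "page",
                      sessions_col: str = "sessions") -> list[str]:
    """Return pages with fewer than `threshold` sessions, quietest first.

    `rows` is any iterable of CSV lines, e.g. an open file handle.
    """
    totals: dict[str, int] = {}
    for record in csv.DictReader(rows):
        sessions = int(record[sessions_col].replace(",", ""))  # "1,234" -> 1234
        page = record[page_col]
        totals[page] = totals.get(page, 0) + sessions  # sum repeated rows
    flagged = [page for page, total in totals.items() if total < threshold]
    return sorted(flagged, key=lambda page: (totals[page], page))
```

To use it with a file, open the export with newline="" and pass the file handle to low_traffic_pages().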

Consider Your Crawl Budget

Crawl budget is the number of pages a search engine is willing and able to crawl on your site within a given period of time, which in turn limits how many of them can be indexed.

Because search engines have limited resources, such as the time they can spend crawling and indexing each site, every site effectively has a crawl budget. The search engine tries to spend it on the pages of your site that are most important and most likely to be useful to users.

Here are some factors that can affect your crawl budget:

- Quality and relevance of content

- Site structure

- Total number of pages (URLs) on the site

- User behavior
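To see where your crawl budget actually goes, you can count which URLs Googlebot requests in your server access logs. The Python sketch below assumes logs in the common Combined Log Format, and the function name is hypothetical. User-agent strings can be faked, so for serious analysis verify that the requests really come from Googlebot with a reverse DNS lookup, as Google recommends.

```python
import re
from collections import Counter

# Combined Log Format: ip - user [time] "METHOD path HTTP/x" status size "referer" "agent"
LOG_LINE = re.compile(
    r'^(\S+) \S+ \S+ \[[^\]]+\] "(\S+) (\S+) [^"]*" (\d{3}) \S+ "[^"]*" "([^"]*)"'
)


def googlebot_hits(log_lines) -> Counter:
    """Count requests per path whose user agent claims to be Googlebot."""
    hits = Counter()
    for line in log_lines:
        match = LOG_LINE.match(line)
        # Group 3 is the requested path, group 5 the user-agent string.
        if match and "Googlebot" in match.group(5):
            hits[match.group(3)] += 1
    return hits
```

If many hits go to parameter URLs, duplicates, or error pages, that is crawl budget not spent on the pages you want indexed.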

Check All Links And Add Interlinks

Internal linking is the process of creating links between pages on your site. Proper internal linking can significantly improve both indexing and user experience, because it helps search engines understand your site’s structure and discover new pages. Here’s a comprehensive article on all that you need to know about link building.
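Two checks make internal linking measurable: click depth (how many clicks a page is from the homepage) and orphan pages (pages no internal link leads to, so crawlers can only find them through a sitemap or external links). Assuming you have a map of internal links, for example from a crawler export, a breadth-first search finds both. The link data and function names below are made up for illustration.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], start: str = "/") -> dict[str, int]:
    """Breadth-first search: minimum number of clicks from start to each page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(links: dict[str, list[str]], all_pages: set[str],
                 start: str = "/") -> set[str]:
    """Pages (e.g. from your sitemap) that no internal link path reaches."""
    return all_pages - set(click_depths(links, start))
```

Pages with a high click depth or no inbound path at all are good candidates for new internal links.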

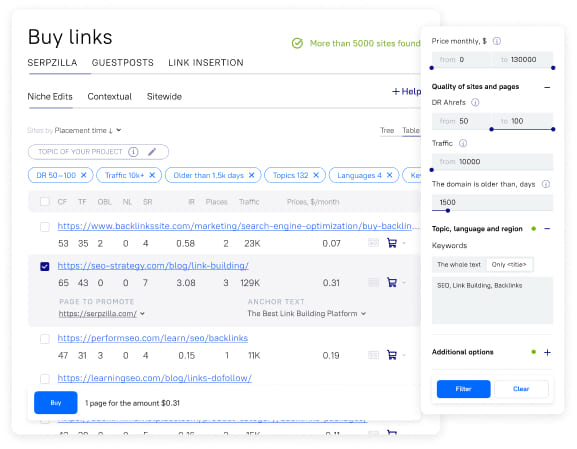

Provide External Links

Links from other websites to yours (backlinks) help search engines discover your pages and assess the quality of your content, which supports your site’s indexing. New links also signal to search engines that a page is gaining value.

Use various types of links to keep your link profile natural and diverse.

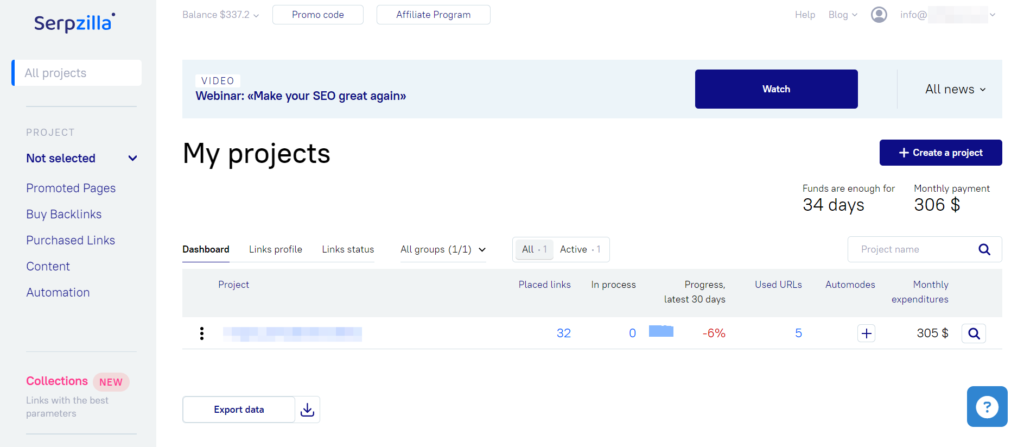


Conclusion

Don’t be disheartened if your web pages aren’t indexed. What you do next is what matters. With the pointers above and tools such as Serpzilla, you’ll be better equipped to get your web pages back into Google’s index.

Need help with using our tool? Get in touch with our experts now!