Mastering Technical SEO: Best Practices for 2023

Technical SEO is the process of optimizing a website from a technical perspective to improve its ranking and visibility in search engines. It involves making changes to the website’s code, structure, and configuration to ensure that search engine crawlers can easily crawl and index the website’s content.

Technical SEO also entails optimizing website speed, mobile responsiveness, and ensuring that the website is secure and free of errors that could affect its performance in search engine rankings.

The goal of technical SEO is to improve a website’s search engine visibility, which can lead to increased traffic, engagement, and conversions.

The Importance of Technical SEO in 2023

Lately, there have been a lot of tweets like this one, posted by Christoph C. Cemper (@cemper) on April 22, 2023:

Time to look for a new job for some SEOs. You spent your SEO life as "Technical SEO" and chasing goals that Google gave you as little carrots over a decade via "trusted influencers", like

– page speed

– mobile friendliness

– SSL

– and other technical implementation details…

There have also been articles downplaying the role of technical SEO.

Source: Search Engine Land

However, in 2023, technical SEO is more important than ever before. With increasing competition among websites, search engines becoming more sophisticated, and content creation becoming easier with the advent of ChatGPT, technical SEO is crucial for ensuring a website’s success in organic search results.

A website with poor technical SEO can result in slow loading times, broken links, and poor user experience, which can cause visitors to leave the site and decrease engagement metrics. This can ultimately lead to a lower search engine ranking and less visibility in search engine results pages.

On the other hand, a website with strong technical SEO can improve the user experience, increase page speed, and make it easier for search engine crawlers to index the site’s content. This can lead to higher search engine rankings, increased organic traffic, and more opportunities for engagement and conversion.

In addition, technical SEO is also important for optimizing a website for mobile devices, which account for a significant portion of online searches. Ensuring a website is mobile-friendly and has fast loading times is crucial for improving its visibility and ranking in search engine results pages.

Site Architecture

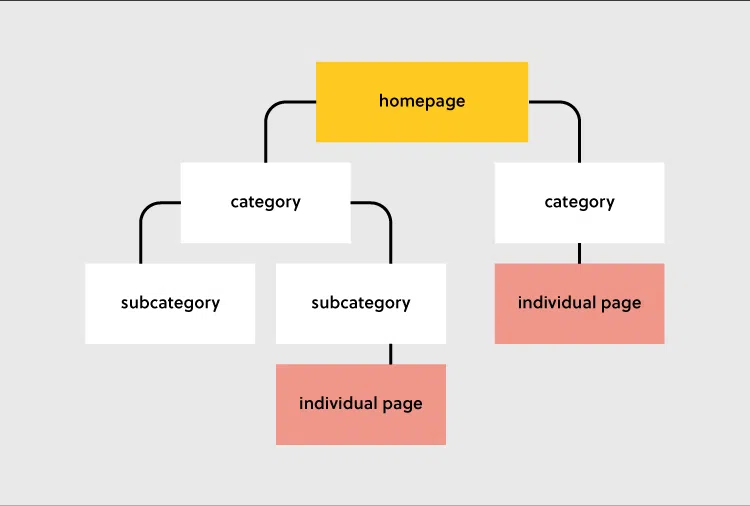

Site architecture refers to the way a website is organized, including the navigation, URL structure, and internal linking. An effective site architecture can have a significant impact on a website’s search engine ranking and visibility – and therefore, is crucial for technical SEO.

A well-organized site can make it easier for search engine crawlers to navigate a website and understand the relationships between pages. This ensures that all pages on the website are properly indexed and ranked.

Moreover, an effective site architecture improves your site’s UX by making it easier for visitors to find the information they’re looking for. This leads to higher engagement rates, and by extension, gets your site higher rankings.

Best practices for optimizing your site architecture

Create a clear hierarchy: Your website should have a clear hierarchy of pages that reflects the most important content on the website. This hierarchy should be reflected in the website’s navigation and URL structure.

Use descriptive URLs: URLs should be descriptive and include relevant keywords, making it easier for both users and search engines to understand the content of the page. Avoid using generic URLs such as “/page1” or “/post123”.

Make navigation logical: The navigation and information flow should be intuitive and easy to follow, with relevant categories and subcategories organized linearly or top-down as appropriate. Users should be able to reach any important page from the homepage in no more than 3 or 4 clicks.

Show breadcrumbs: Breadcrumbs are a secondary navigation system that displays the user’s path on the website. This can be helpful for users and search engines to understand the website’s hierarchy and where they are at the moment.

Use internal links liberally: Internal linking is an important aspect of site architecture. It is important to link related content together using relevant anchor text. This can help users and search engines to understand the relationships between pages.
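The URL and navigation guidelines above can be checked with a few lines of code. The following is a minimal Python sketch, not a definitive implementation: `slugify` turns a page title into a descriptive URL slug, and `click_depths` runs a breadth-first search over an internal-link map to count how many clicks each page is from the homepage. The function names, the sample title, and the `SITE_LINKS` map are all hypothetical and only serve as illustration.

```python
import re
import unicodedata
from collections import deque


def slugify(title: str) -> str:
    """Turn a page title into a lowercase, hyphen-separated URL slug."""
    # Strip accents so that "Café" becomes "Cafe" instead of being dropped.
    ascii_title = (
        unicodedata.normalize("NFKD", title)
        .encode("ascii", "ignore")
        .decode("ascii")
    )
    # Replace every run of non-alphanumeric characters with a single hyphen.
    return re.sub(r"[^a-z0-9]+", "-", ascii_title.lower()).strip("-")


def click_depths(links: dict, home: str) -> dict:
    """Return the minimum number of clicks from `home` to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal-link map: page -> pages it links to.
SITE_LINKS = {
    "/": ["/blog/", "/products/"],
    "/blog/": ["/blog/technical-seo/"],
    "/products/": [],
    "/blog/technical-seo/": ["/"],
}

print(slugify("Best Practices for Technical SEO in 2023!"))
# best-practices-for-technical-seo-in-2023
print(click_depths(SITE_LINKS, "/"))
```

Pages that are missing from the result cannot be reached through internal links at all, and pages with a depth above 3 or 4 are candidates for better internal linking.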

Mobile Optimization

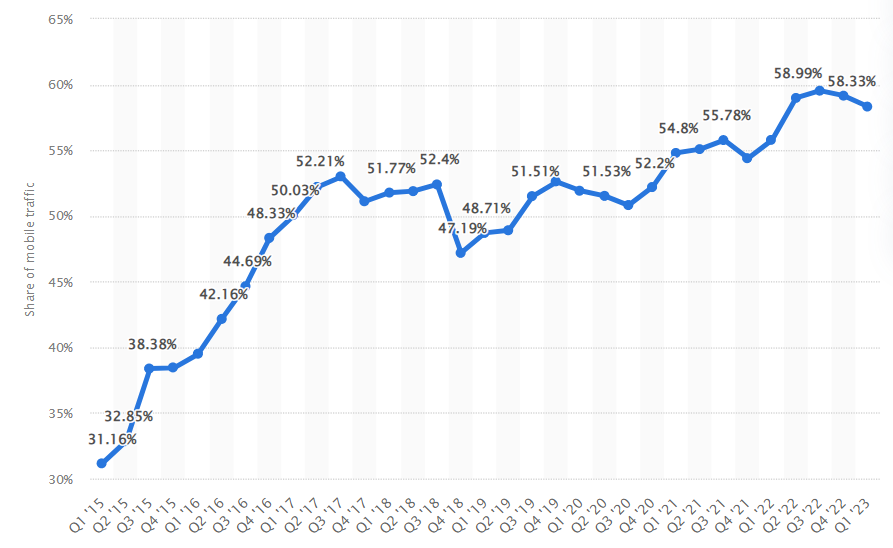

Mobile optimization is crucial for businesses because of the increasing number of people who use mobile devices to browse the internet. In 2022, mobile devices accounted for nearly 60% of website traffic worldwide, making it imperative for businesses to have a website that is optimized for mobile devices.

Source: Statista

A mobile-optimized website has faster load times, is easier to navigate, and is more readable, making it more appealing to users. Mobile optimization is particularly important for ecommerce businesses, as consumers increasingly make purchases using their mobile devices. According to a report by eMarketer, mobile commerce sales accounted for 72.9% of all e-commerce sales in 2021, and this figure is expected to continue to rise.

Best practices for optimizing your site for mobile devices

Mobile Responsiveness: Your website must be designed responsively to adapt to various screen sizes, resolutions, and orientations. This means that your website should automatically adjust to fit the user’s device screen, providing a seamless and consistent user experience.

Page Speed: Mobile users expect fast loading times, and a delay of just a few seconds can result in user frustration and abandonment. Optimize your website for faster loading times by compressing images, using caching, minimizing HTTP requests, and reducing the number of redirects.

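Mobile-First Indexing: Google has used mobile-first indexing by default for new websites since 2019, which means that the mobile version of your pages, rather than the desktop version, is what Google primarily uses for indexing and ranking. Make sure the mobile version contains the same important content as the desktop version.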

Simplified Navigation: Navigation menus should be designed for mobile devices, with a clear hierarchy and fewer options to minimize clutter. Use dropdown menus or collapsible panels to conserve space while providing easy access to site content.

Optimize Content for Mobile: Use shorter paragraphs, larger font sizes, and easily readable fonts to make content more mobile-friendly. Avoid using too much text or media that may slow down page loading times or be displayed in unpredictable ways.

Test for Mobile: Before launching your website, test it on multiple devices and browsers to ensure that it functions smoothly and looks consistent across different platforms.
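One small part of mobile testing can be automated. Responsive pages normally declare a viewport meta tag with `width=device-width`, so a quick check for that tag catches pages that were never set up for mobile screens. The sketch below uses only the Python standard library. The `ViewportFinder` class and the `has_responsive_viewport` function are hypothetical names, and the check is an assumption about what to look for rather than a complete mobile-friendliness test.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Remember the content of the first <meta name="viewport"> tag seen."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        if tag == "meta" and name == "viewport" and self.viewport is None:
            self.viewport = attributes.get("content") or ""


def has_responsive_viewport(html: str) -> bool:
    """Return True if the page declares a device-width viewport."""
    finder = ViewportFinder()
    finder.feed(html)
    if finder.viewport is None:
        return False
    # Ignore spacing differences such as "width = device-width".
    return "width=device-width" in finder.viewport.replace(" ", "").lower()


page = '<head><meta name="viewport" content="width=device-width, initial-scale=1"></head>'
print(has_responsive_viewport(page))  # True
```

A check like this does not replace testing on real devices, but it is cheap enough to run on every page of a site.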

Site Speed

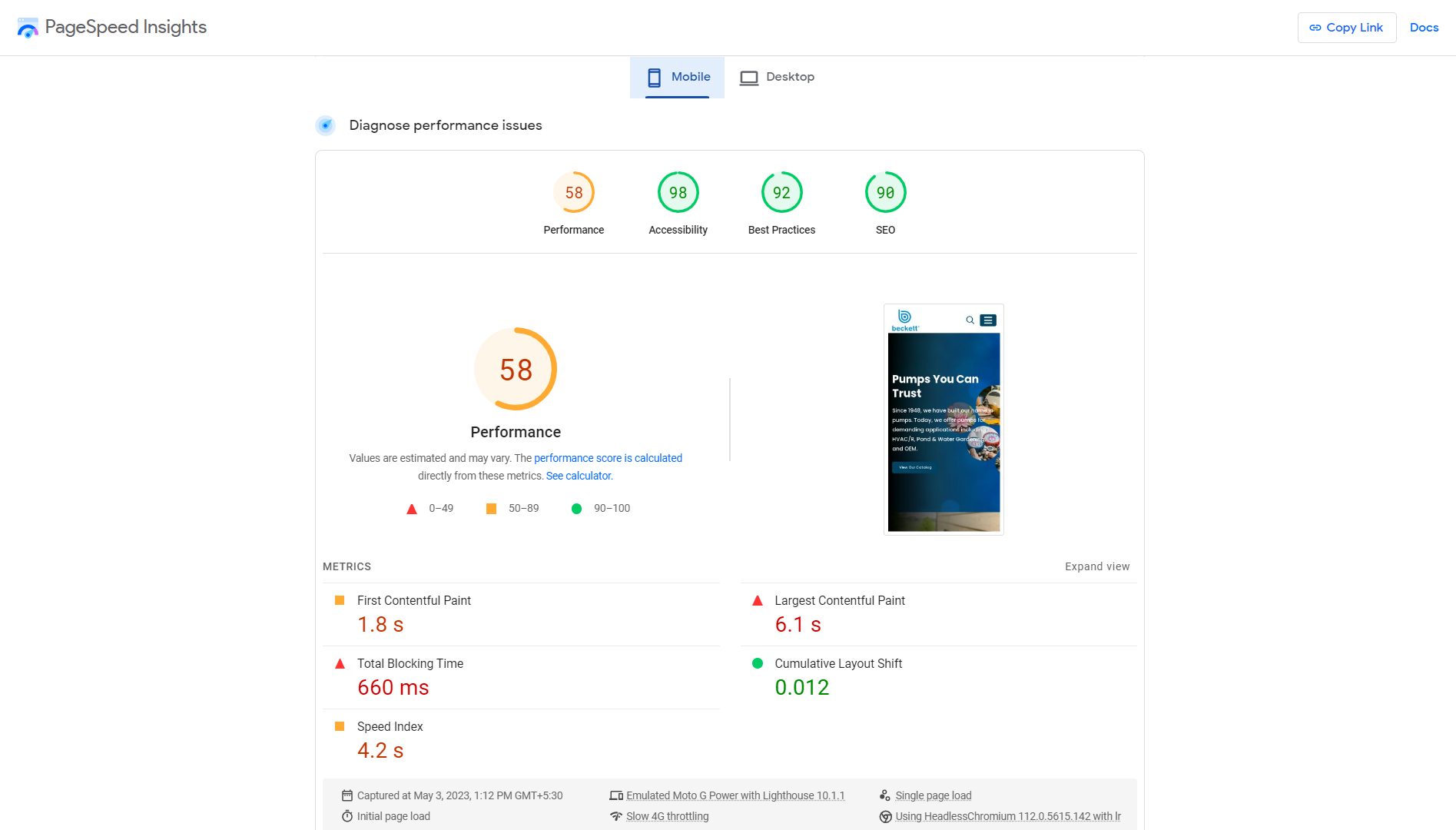

Site speed is itself an important factor in determining the success of a website. How fast your website loads and responds to user requests directly impacts your search engine rankings. Google provides its own speed-checking tool, PageSpeed Insights.

Page loading speed is important in three ways:

User Experience: Slow loading times and delayed responses frustrate users and lead to high bounce rates. Research has shown that users expect a website to load in under two seconds, and any delay beyond this can result in a significant loss of traffic and engagement. A faster website provides a better user experience, improves user satisfaction, and increases the likelihood of conversion.

Mobile Optimization: With mobile devices accounting for more than half of website traffic, site speed becomes even more crucial. Mobile users expect faster load times and may have limited data plans, making it essential to optimize site speed for mobile devices. A mobile-optimized website with fast loading times can improve engagement, conversion rates, and search engine rankings.

Competition: In today’s fast-paced digital world, website visitors have a short attention span, and a slow website can quickly lead to losing potential customers to competitors with faster websites. Therefore, site speed is a crucial aspect of staying competitive in the digital landscape.

Best practices for improving site speed

Optimize Images: Images are often the largest files on a webpage and can significantly slow down page loading times. Optimize images by compressing them without sacrificing quality, resizing them to the appropriate dimensions, and using the right image format. JPEG is generally best for photographs, while PNG is better for graphics and logos.

Minimize HTTP Requests: Each time a webpage is loaded, the browser sends a request to the server for each element on the page – such as images, scripts, and stylesheets. Minimizing the number of HTTP requests can improve page loading times. Combine multiple CSS and JavaScript files into a single file, use CSS sprites to combine multiple images into a single image, and reduce the number of third-party scripts and plugins used on the website.

Minify CSS and JavaScript Files: Minifying is the process of removing all unnecessary characters from CSS and JavaScript files, such as white space, comments, and line breaks. This reduces file size and improves page loading times. Use minification tools or plugins to minify CSS and JavaScript files.

Enable Caching: Browser caching allows the browser to store previously loaded web pages and reuse them instead of requesting them again from the server. This reduces page loading times and server load. Use caching tools or plugins to enable browser caching.

Use a Content Delivery Network (CDN): A CDN is a network of servers located in different geographic locations that can store and deliver website content more efficiently to users. This reduces page loading times by delivering content from the server closest to the user. Consider using a CDN to improve site speed.
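To make the minification step above concrete, here is a deliberately naive Python sketch that strips comments, line breaks, and unnecessary white space from a stylesheet. The `minify_css` function is a hypothetical helper written for illustration. It does not handle edge cases such as punctuation inside quoted strings, so a real project would more likely rely on an established minification tool or plugin.

```python
import re


def minify_css(css: str) -> str:
    """Naively minify CSS by removing comments and redundant white space."""
    # Remove /* ... */ comments, including multi-line ones.
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)
    # Collapse every run of white space (spaces, tabs, newlines) to one space.
    css = re.sub(r"\s+", " ", css)
    # Drop the spaces around punctuation that does not need them.
    css = re.sub(r"\s*([{}:;,])\s*", r"\1", css)
    # The last semicolon in a rule is optional.
    css = css.replace(";}", "}")
    return css.strip()


stylesheet = """
/* header */
body {
    margin: 0;
    color: #333;
}
"""

minified = minify_css(stylesheet)
print(minified)  # body{margin:0;color:#333}
print(len(stylesheet), "->", len(minified), "characters")
```

The saving on one small rule is trivial, but across large stylesheets and scripts it adds up, especially when combined with caching and a CDN.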

Structured Data

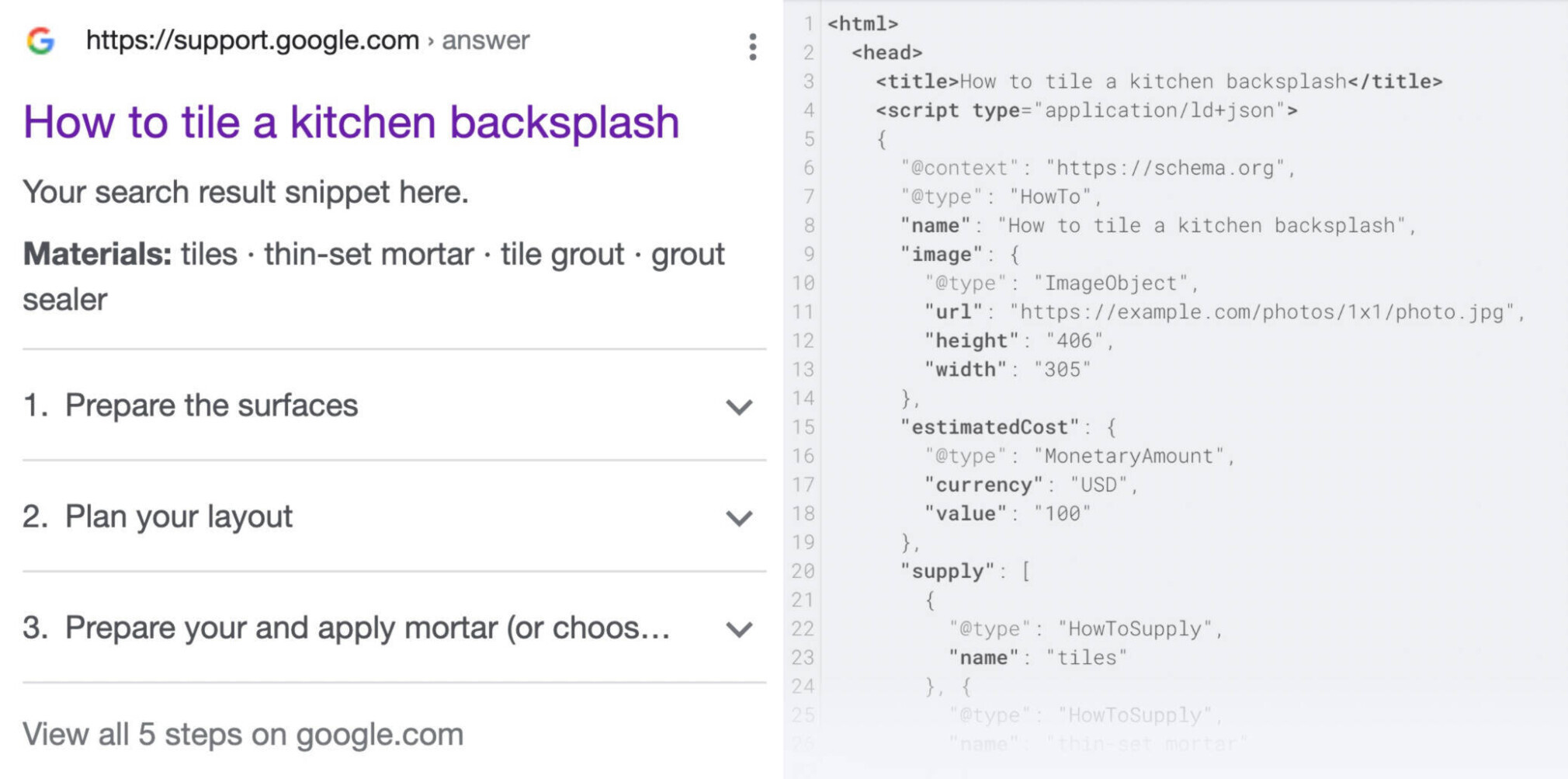

Structured data refers to a specific format for organizing and presenting data on the web in a way that is easily understood by search engines. It involves using a standardized vocabulary, such as Schema.org, written in a markup format such as JSON-LD, to describe the content and context of web pages, making it easier for search engines to crawl and understand the content.

Structured data can include information such as product details, reviews, recipes, events, and more. This enables search engines to present more relevant and informative search results to users, with the following benefits:

Improved Crawling and Indexing: By providing structured data on a webpage, search engines can more easily crawl and index the content, ensuring that it appears in relevant search results. This is particularly important for larger websites or those with complex content structures, as it can help search engines identify and prioritize important pages.

Enhanced Visibility: Structured data can make your pages eligible for rich results, which show additional information such as reviews, images, and pricing directly in the search results. This gives you more real estate in the SERPs and can increase your click-through rates.

Voice Search: With the increasing use of voice search, you can get your site to appear in relevant voice search results by providing structured data that is optimized for voice search queries.

Best practices for implementing structured data

Use Schema Markup: Schema markup is a standardized format for describing the content on a webpage using structured data. Use Schema.org to identify the most relevant data types for your website, such as product details, reviews, recipes, events, and more. Use the appropriate Schema markup to provide detailed information about your content, which can help search engines better understand and display your content in search results.

Implement Open Graph (OG) Protocol: The OG Protocol is a type of structured data used by Facebook and other social media platforms to extract information from web pages to display rich media content in social media posts. Implement Open Graph tags on your website to provide Facebook and other social networks with information about your content, such as images, descriptions, and titles.

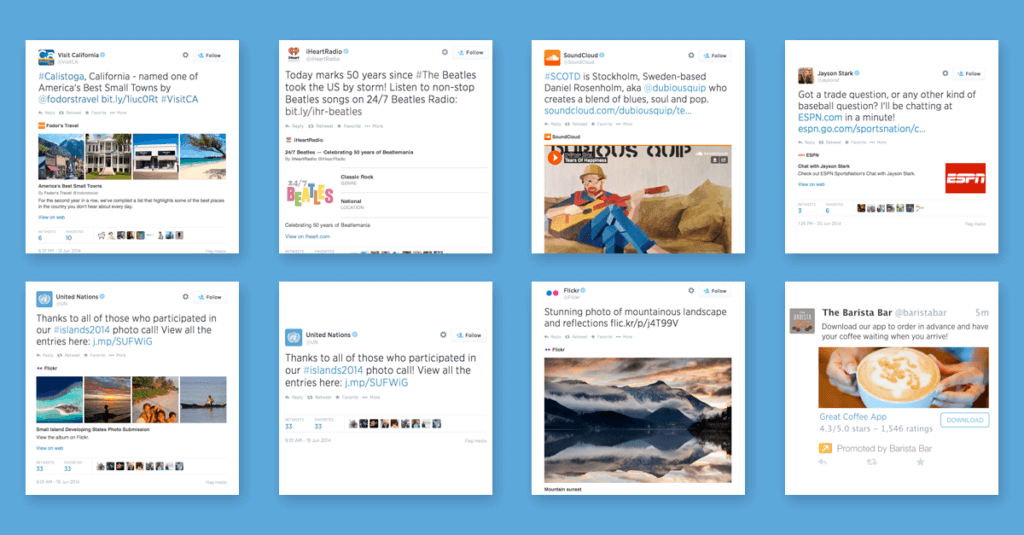

Use Twitter Cards: Twitter Cards are another type of structured data used to display rich media content in tweets. Implement Twitter Card markup on your website to provide Twitter with information about your content, such as images, descriptions, and titles, which can help improve the appearance of your tweets and increase engagement.

Keep Structured Data Up to Date: Ensure that your structured data is accurate and up to date. Regularly review your structured data markup to ensure that it reflects the current content on your website. This will help to ensure that your content is being displayed correctly in search results, and will also help to improve the user experience.

Test Structured Data: Use Rich Results Test or Schema Markup Validator or other similar tools to test your structured data markup to ensure that it is being correctly recognized by search engines. Fix any errors or warnings that pop up.
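As an illustration of Schema markup, the following Python sketch builds a JSON-LD snippet for a product page. The `product_jsonld` function and the sample product are hypothetical, and only a handful of Schema.org `Product` properties are shown. Which properties a given search feature requires is not covered here, so treat the output as a starting point to run through the testing tools mentioned above.

```python
import json


def product_jsonld(name, price, currency, rating, review_count):
    """Return a <script> tag containing Schema.org Product markup as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
        },
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
    }
    # Escape "</" so that text in the data can never close the script tag early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">{payload}</script>'


# Hypothetical product used only for demonstration.
print(product_jsonld("Trail Running Shoe", 89.5, "USD", 4.6, 128))
```

Generating the markup from the same data that renders the page is one way to keep structured data in sync with the visible content.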

Security

Security plays a significant role in ensuring that a website is trustworthy and safe for users to visit. Security measures such as SSL certificates and HTTPS protocols encrypt the communication between the user’s browser and the website, which helps protect user data from unauthorized access.

A secure website also earns higher levels of trust and credibility and gives users confidence in the website’s functionality, which ultimately results in better user engagement and conversions.

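Google has made it clear that security is a ranking factor for search results: it has used HTTPS as a ranking signal since 2014, although it described the signal as a lightweight one. All else being equal, websites that implement HTTPS have an edge over those that don’t.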

On the other hand, websites that are not properly secured can be vulnerable to malware infections. Once they’re breached, search engines flag them as unsafe, and their rankings and traffic go out the window.

Best practices for securing your website

HTTPS: HTTPS is a secure version of the HTTP protocol, which encrypts all communication between the website and the user’s browser. Websites should use HTTPS protocols to secure user data and improve their search engine rankings. For example, if you visit your bank’s website, you should see “https://” at the beginning of the URL, indicating that the website uses HTTPS protocols to secure your data.

SSL Certificate: Secure Sockets Layer (SSL) certificates are digital certificates that validate the identity of a website and encrypt the data that is transmitted between the website and the user’s browser. (Modern connections actually use SSL’s successor, Transport Layer Security (TLS), but the certificates are still commonly called SSL certificates.) Websites should use SSL certificates to protect user data and prevent unauthorized access. For example, if you visit a website that uses an SSL certificate, you will see a padlock icon in the address bar of your browser, indicating that the connection is secure.

Two-Factor Authentication: Two-factor authentication is a security measure that requires users to provide two forms of identification to access an account. This helps to prevent unauthorized access to the website and protects user data. For example, when you log in to your bank’s website, you might be required to enter a password and then enter a unique verification code that is sent to your phone or email address.

Regular Updates and Maintenance: Websites should be regularly updated with the latest security patches and software updates to prevent vulnerabilities from being exploited. Additionally, regular security audits and maintenance can help identify and address potential security risks. For example, if your website is built on WordPress, you should regularly update WordPress itself as well as all plugins to the latest version to ensure all vulnerabilities are patched with the latest security updates.

Strong Password Policies: Websites should require users to create strong, complex passwords that are difficult to guess. Additionally, passwords should be changed regularly, and users should be encouraged to use password managers to store their login information.
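The verification codes used in two-factor authentication are often time-based one-time passwords (TOTP, specified in RFC 6238), which an authenticator app and the server compute independently from a shared secret. Codes sent by SMS or email work differently. The Python sketch below shows the TOTP calculation using only the standard library. It is meant for understanding the mechanism, not for production use, where a maintained and audited library would be the safer choice. The function names and the sample secret are assumptions made for this example.

```python
import hashlib
import hmac
import struct
import time


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """Counter-based one-time password (RFC 4226), the basis of TOTP."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte select an offset.
    offset = digest[-1] & 0x0F
    number = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(number % 10 ** digits).zfill(digits)


def totp(secret: bytes, at=None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password: HOTP over the current 30-second window."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, submitted: str, at=None) -> bool:
    """Compare the submitted code with the expected one in constant time."""
    return hmac.compare_digest(totp(secret, at), submitted)


# Sample secret taken from the RFC test vectors; never hard-code real secrets.
SECRET = b"12345678901234567890"
print(totp(SECRET))  # changes every 30 seconds
```

A real login flow would typically also accept the code from the adjacent time window to tolerate clock drift and would rate-limit failed attempts.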

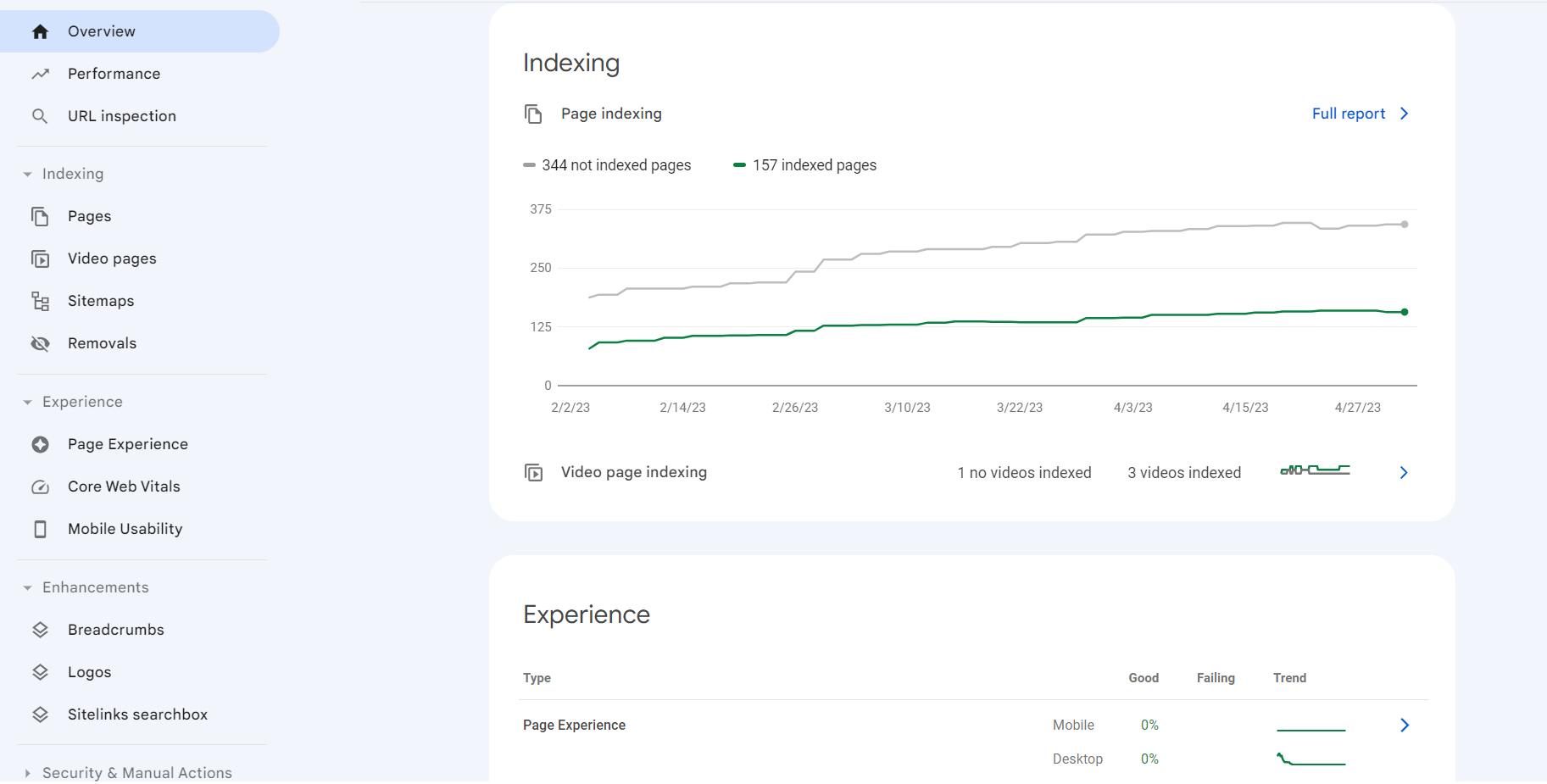

Crawling and Indexing

Crawling refers to the process of a search engine’s software, commonly known as a “spider” or “bot”, automatically discovering, scanning, accessing and rendering all pages on a website. This process allows the search engine to identify and understand the content of each page, as well as any links between pages.

Crawling is important because it allows search engines to identify and index new pages, update existing content, and detect broken links or errors. By ensuring that all of your web pages are crawled, you make sure that your content is accessible to search engines and that any new content is discovered and indexed quickly.

Indexing refers to the process of storing and organizing the information collected during the crawling process. This information is stored in a search engine’s database (known as an “index”) and is used to generate search results when a user conducts a search query.

Indexing determines whether a page will appear in the SERPs, how relevant it is to the query, and at what position it will rank. You need to optimize the content and structure of your website for indexing.

Best practices to get your site crawled and indexed

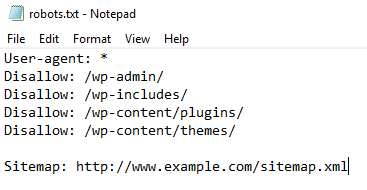

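Robots.txt: The robots.txt file is a text file that tells search engine crawlers which pages or sections of a website they should not crawl. By using the robots.txt file, you can keep search engines from crawling duplicate content, protected sections, and pages that are not relevant to the search queries you want to target. Note that robots.txt controls crawling rather than indexing: a blocked URL can still end up in the index if other pages link to it, so use a noindex directive for pages that must stay out of search results.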

Sitemap.xml: A sitemap is an XML file that lists the pages on a website that you want search engines to find and can include metadata about each page, such as its relative importance and last modification date. By submitting a sitemap to Google Search Console, you can help search engines discover all of your pages quickly and efficiently.

Canonical tags: A canonical tag is an HTML element that tells search engines which version of a page is the “original” or “preferred” version. It looks like this:

<link rel="canonical" href="https://example.com/example-page"/>

By using canonical tags, you can avoid duplicate content issues that can harm your site’s rankings.

Other best practices for crawling and indexing include optimizing the structure and information flow of your website, avoiding duplicate content, using descriptive and unique meta tags, and making good use of internal links.
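The robots.txt and sitemap practices above can be sketched in Python with the standard library. In the example below, `is_crawlable` checks a URL against a set of robots.txt rules using `urllib.robotparser`, and `build_sitemap` produces a minimal XML sitemap. The rules, URLs, dates, and function names are hypothetical, and only the `loc` and `lastmod` sitemap elements are included, so this is a starting point rather than a complete generator.

```python
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for an example site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /search
Sitemap: https://example.com/sitemap.xml
"""


def is_crawlable(robots_txt: str, url: str, user_agent: str = "*") -> bool:
    """Return True if the given robots.txt rules allow crawling the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


def build_sitemap(pages) -> str:
    """Build a minimal XML sitemap from (url, last_modified) pairs."""
    urlset = ET.Element(
        "urlset", xmlns="http://www.sitemaps.org/schemas/sitemap/0.9"
    )
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(is_crawlable(ROBOTS_TXT, "https://example.com/blog/technical-seo/"))  # True
print(is_crawlable(ROBOTS_TXT, "https://example.com/admin/settings"))  # False
print(build_sitemap([("https://example.com/", "2023-05-01")]))
```

Running a check like `is_crawlable` over the URLs in your own sitemap is a simple way to spot pages that you are asking search engines to index while also blocking them from being crawled.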

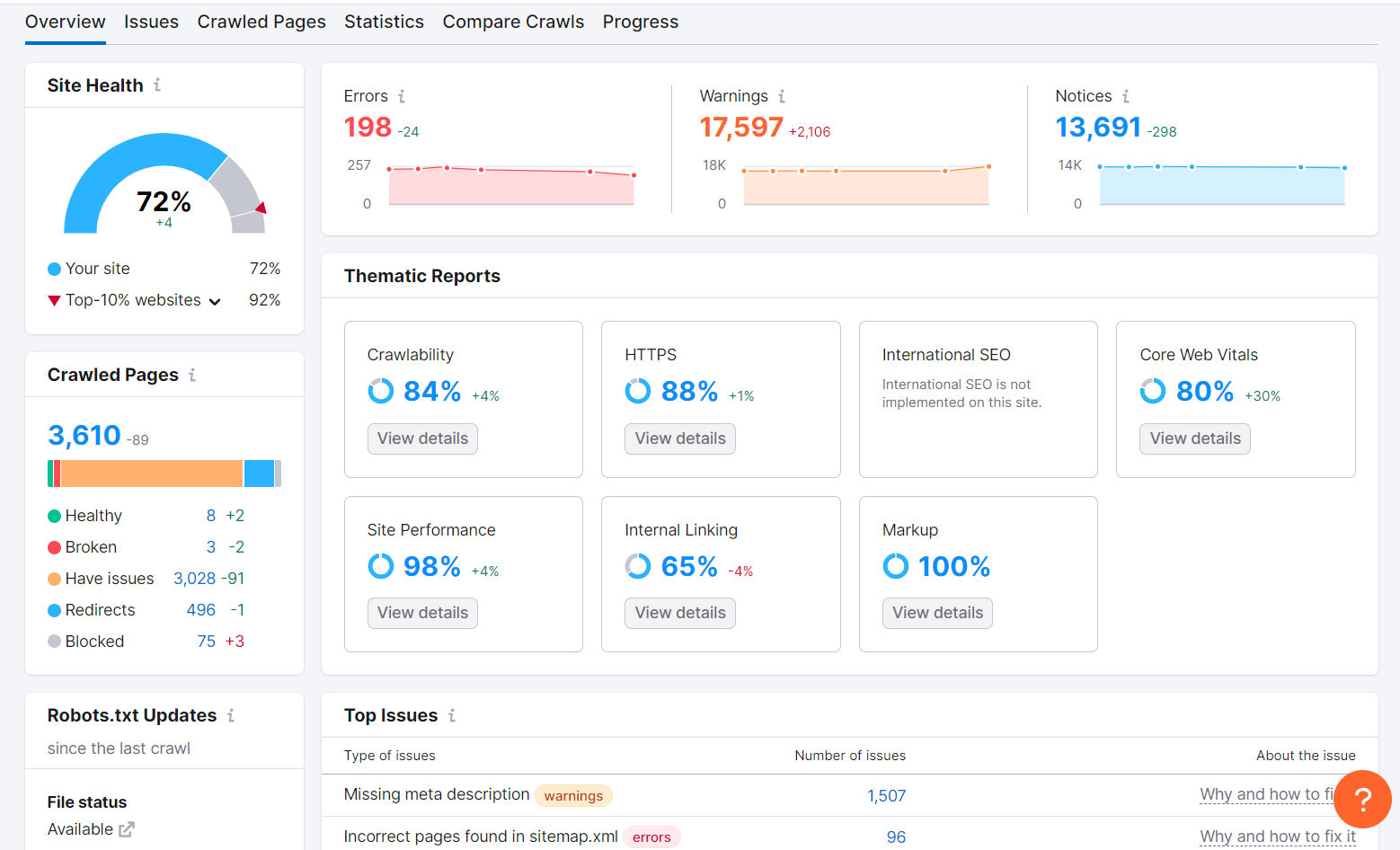

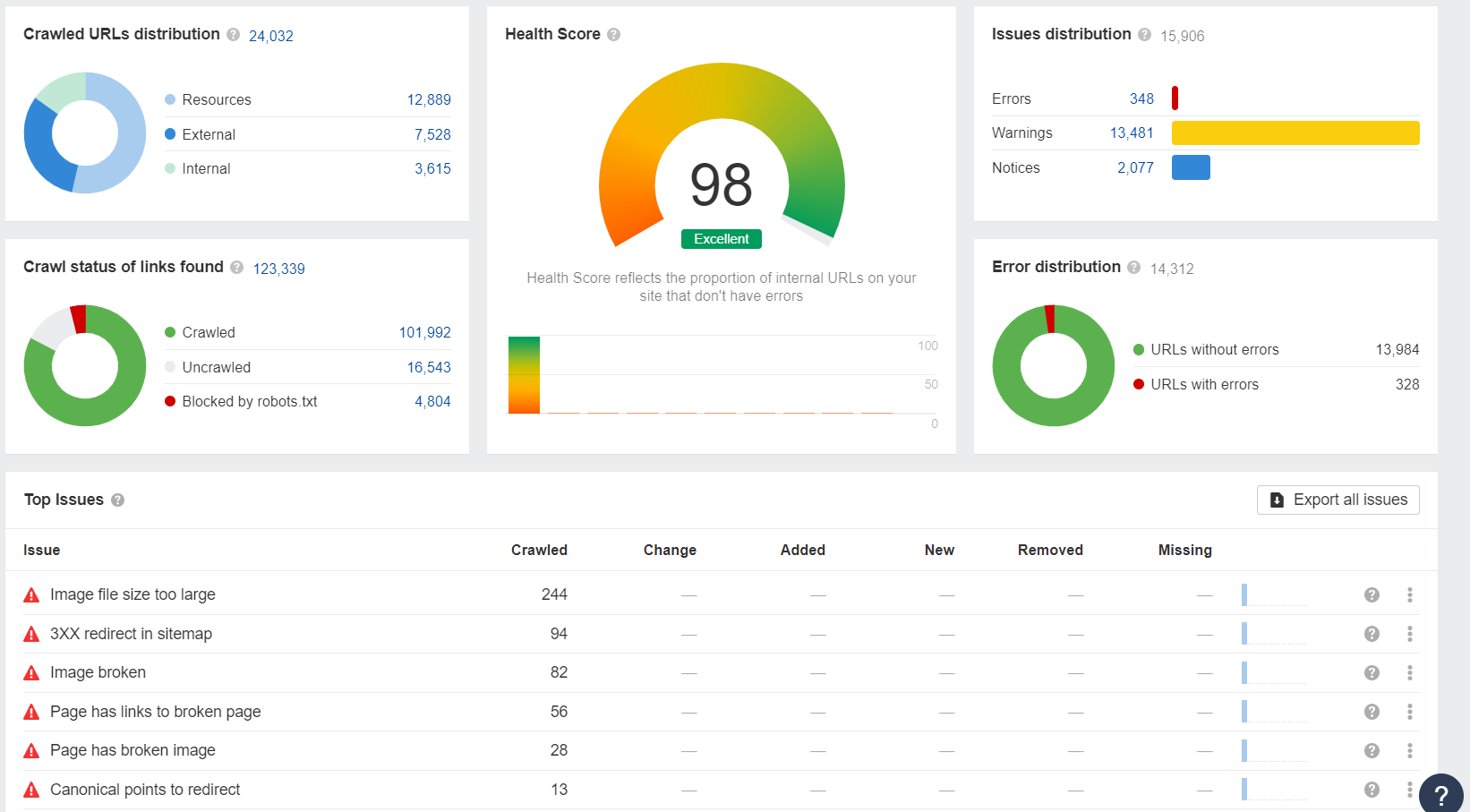

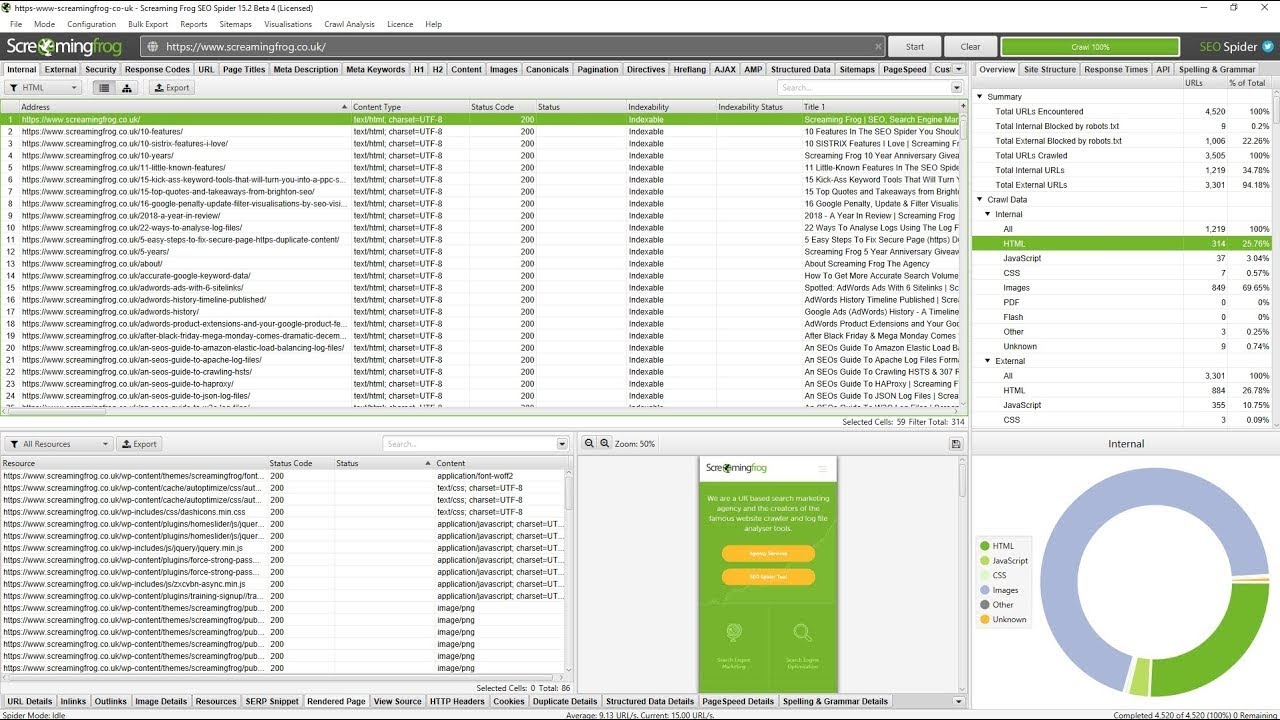

Technical SEO Tools

Technical SEO tools are designed to help website owners and marketers optimize their website’s technical elements to improve rankings and user experience. Some of the most popular Technical SEO tools are:

The Future of Technical SEO

As web technology continues to advance and search engines become more intelligent, technical SEO will continue to evolve and become even more important in the coming years. Search engines will make more use of AI and machine learning (ML) to better analyze and understand websites. And they will continue to rank pages that provide a great UX higher up in the search results.

Once you come up with a technical SEO strategy that takes these two key trends into account, it’s just a matter of following best practices consistently, and SEO success will follow.