For years, link builders have used topical relevance as a kind of intuition-based metric. We looked at categories, scanned headlines, checked anchor text, and hoped Google would “get the idea.” If a site felt related, or if we could concoct at least some kind of connection, it was good enough, especially if the DR was high.

Well, that era is over.

In 2025–2026, relevance is no longer inferred indirectly. It’s read, interpreted, and evaluated directly by Large Language Models. Google’s ranking systems increasingly rely on LLM-style understanding to determine what a page is about, which entities it meaningfully covers, and whether two pieces of content belong to the same semantic neighborhood. Links now act less like votes and more like contextual evidence.

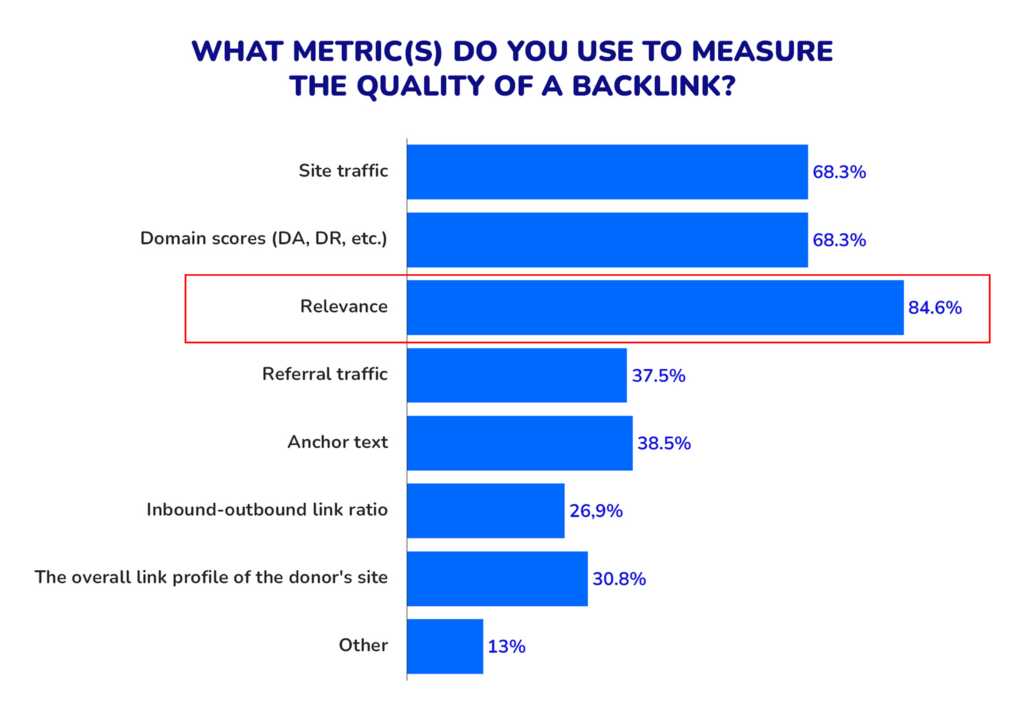

The image below is an answer from a 2025 survey of SEO specialists:

What Is LLM-Driven Topic Affinity?

This shift has created a new practical problem for SEO teams:

How do you verify topical compatibility before placing a link, not by metrics, but by meaning?

This is where LLM-driven topic affinity comes in.

Unlike classic relevance checks (category match, keyword overlap, DR filtering), LLM-based affinity analysis asks a more fundamental question:

Can this link placement site meaningfully “talk” about the same things as the acceptor? Can it use the same concepts, entities, and explanatory depth?

If the answer is yes, the link reinforces semantic authority.

If not, even a DR 70 placement can be neutral at best or actively confusing at worst.

In this article, we’ll break down how topic affinity works from an LLM perspective, how you can test it using simple, practical methods (even without deep technical expertise), and why tools like Serpzilla Smart Topic are becoming essential for modern link qualification.

Simple LLM-Based Methods to Evaluate Topical Relevance (Hands-On Checklist)

You don’t need to build your own model or run complex NLP pipelines to check topic affinity. Modern LLMs already “think” the way search engines increasingly do — through meaning, entities, and explanatory coherence. The trick is asking the right questions.

Below is a practical, checklist-style framework you can use today to evaluate donor–acceptor relevance through an LLM lens.

1. The “Explain It Naturally” Test

Goal: Check whether the link placement site can organically support the topic of the linked content.

How to do it

Take a representative article from the link placement site and ask an LLM:

“Based on this article, can this website naturally explain [your core topic] without sounding forced or off-topic?”

What to look for

✅ The model produces a coherent, structured explanation

✅ It uses correct terminology without overgeneralizing

❌ It struggles, gives vague filler, or shifts to adjacent but different topics

Example

- Acceptor: Online casino compliance in the EU

- Donor: General tech news blog

If the LLM drifts into “digital transformation” or “AI trends” instead of legal frameworks, regulators, or licensing bodies, that signals low affinity.
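If you run this test on more than a few prospects, it helps to generate the prompt the same way every time. The snippet below is a minimal sketch that fills in the prompt from this section; the function name `build_explain_prompt` and the `max_chars` trimming limit are illustrative assumptions, and the resulting string can be pasted into whichever LLM you use.

```python
from string import Template

# Prompt wording follows the "Explain It Naturally" test in this section.
EXPLAIN_PROMPT = Template(
    "Based on this article, can this website naturally explain $topic "
    "without sounding forced or off-topic?\n\n"
    "Article:\n$article"
)


def build_explain_prompt(topic: str, article_text: str, max_chars: int = 6000) -> str:
    """Fill the prompt for one prospect.

    max_chars is an assumed limit that keeps very long articles
    from overflowing the model's input; adjust it to your model.
    """
    excerpt = article_text.strip()[:max_chars]
    return EXPLAIN_PROMPT.substitute(topic=topic, article=excerpt)


# Made-up example matching the casino-compliance scenario above.
prompt = build_explain_prompt(
    topic="online casino compliance in the EU",
    article_text="Five AI trends reshaping enterprise software this year...",
)
print(prompt)
```

Keeping the wording fixed makes answers comparable across prospects, so differences in the output reflect the site rather than the phrasing of the question.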

2. Shared Entity Check

Goal: Validate overlap at the entity level, not just keywords.

How to do it

Ask the LLM:

“List the main entities discussed in this article.”

Then compare them to entities central to your acceptor content.

Strong signals

✅ Same or closely related entities appear repeatedly

✅ Entities are used in explanatory roles, not just mentions

Weak signals

❌ Entity overlap exists only at a generic level

❌ Entities appear once or twice with no context

Hands-on tip

If the link placement site regularly explains entities like regulators, platforms, standards, tools, or methodologies relevant to your niche, it’s usually a good semantic match.
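Once the LLM has listed entities for the donor article, the comparison itself can be done mechanically. The following is a rough sketch, not a standard metric: it assumes you have one string per entity mention, treats an entity as a "strong signal" only if it is mentioned at least `min_mentions` times (an arbitrary threshold), and reports what share of your acceptor's core entities the donor covers. The entity lists are made-up examples.

```python
from collections import Counter


def normalize(entity: str) -> str:
    """Lowercase and collapse whitespace so 'AML  Directive' matches 'aml directive'."""
    return " ".join(entity.lower().split())


def entity_overlap(donor_mentions, acceptor_entities, min_mentions=2):
    """Return (shared entities, share of acceptor entities covered by the donor).

    donor_mentions: one string per entity mention found in the donor article.
    acceptor_entities: entities central to the acceptor content.
    min_mentions: assumed cutoff separating repeated use from a passing mention.
    """
    donor_counts = Counter(normalize(e) for e in donor_mentions)
    core = {normalize(e) for e in acceptor_entities}
    shared = {e for e in core if donor_counts[e] >= min_mentions}
    coverage = len(shared) / len(core) if core else 0.0
    return sorted(shared), coverage


donor_mentions = [
    "Malta Gaming Authority", "MGA licence", "Malta Gaming Authority",
    "AML directive", "AML Directive", "GDPR",
]
acceptor_entities = ["Malta Gaming Authority", "AML Directive", "GDPR", "Spelinspektionen"]

shared, coverage = entity_overlap(donor_mentions, acceptor_entities)
print(shared, coverage)
```

Exact string matching will miss synonyms and abbreviations, so treat a low score as a prompt to look closer rather than as a verdict. Whether entities play an explanatory role still needs a human or LLM read.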

3. Contextual Linking Simulation

Goal: Predict whether the link will feel semantically justified.

How to do it

Ask the LLM:

“Suggest a natural paragraph where a link to [acceptor topic] would fit in this article.”

Results analysis

✅ Link fits naturally inside an explanatory block

✅ Anchor text is descriptive, not forced

❌ The model inserts the link as an aside or disclaimer

If the link needs verbal gymnastics to exist, Google will feel that too.

How Serpzilla Helps You Find “Semantically Correct” Donor Sites

Manually checking topic affinity with LLM prompts works, but it breaks the moment you move beyond a handful of prospects. Real link building operates at scale, and this is exactly where semantic mismatch usually sneaks in: teams filter by DR, traffic, language, and country, and assume relevance will follow.

With Serpzilla, the logic is reversed.

You don’t hope relevance exists; you filter for it upfront.

Below is how Serpzilla operationalizes semantic relevance in real workflows.

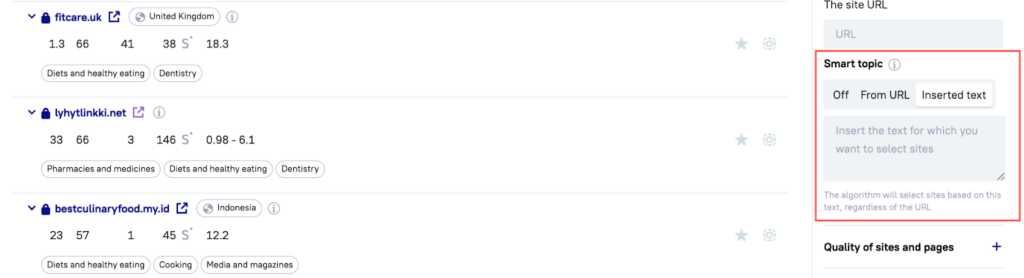

1. Smart Topic: Filtering by Meaning, Not Labels

At the core is Smart Topic — Serpzilla’s AI-driven topical classification.

Instead of relying on:

- site categories,

- self-declared niches,

- or keyword lists,

Smart Topic analyzes actual published content and assigns sites to semantic topic clusters.

What this gives you

- Link prospects grouped by what they consistently write about

- Separation between “occasionally mentions” and “topical focus”

- Cleaner shortlists for niche and sub-niche campaigns

Why it matters

Two sites can both rank for “SEO tools,” but only one consistently explains analytics, methodologies, and workflows. Smart Topic sees that difference.

2. Topic Affinity Between Link Placement Site and Linked Content

Serpzilla doesn’t just classify sites — it helps you match them correctly.

Using Smart Topic, you can:

- Select donor link prospects whose primary topic aligns with your acceptor

- Exclude sites where the topic overlap is accidental or marginal

- Build link lists that reinforce one semantic narrative

This is crucial for:

- niche and expert content,

- YMYL-adjacent projects,

- authority-driven strategies (not volume-based).

3. Filtering Out “False Positives” with High DR

One of the most common problems in link building is semantic noise from strong but unfocused sites.

Serpzilla helps eliminate:

- general media with scattered topics,

- tech blogs that publish “everything for everyone,”

- sites with high DR but no topical depth.

By combining Smart Topic + DR + traffic filters, you can keep authority only where it supports meaning.
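To make the filter order concrete, here is a small sketch of "topic first, authority second" applied to a list of prospects you might have exported to a spreadsheet. It does not use or describe Serpzilla's interface or API; the `Prospect` fields, the topic labels, the thresholds, and the domains are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Prospect:
    domain: str
    topic: str            # hypothetical topic-cluster label
    dr: int
    monthly_traffic: int


def shortlist(prospects, allowed_topics, min_dr=30, min_traffic=1000):
    """Keep prospects that match the topic AND clear the authority thresholds.

    The default thresholds are placeholders, not recommendations.
    """
    return [
        p for p in prospects
        if p.topic in allowed_topics
        and p.dr >= min_dr
        and p.monthly_traffic >= min_traffic
    ]


prospects = [
    Prospect("general-tech-news.example", "general tech", 72, 250_000),
    Prospect("igaming-law.example", "gambling regulation", 41, 8_000),
    Prospect("tiny-compliance-blog.example", "gambling regulation", 12, 300),
]

picked = shortlist(prospects, allowed_topics={"gambling regulation"})
print([p.domain for p in picked])  # ['igaming-law.example']
```

The DR 72 site is dropped despite its authority because its topic does not match, which is the "false positive" case this section describes.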

4. Semantic Consistency Across a Link Campaign

LLMs evaluate not just individual links, but patterns.

Serpzilla enables you to:

- build link prospect pools from the same or adjacent topic clusters,

- maintain consistent semantic signals across dozens of placements,

- avoid “topic hopping” that weakens perceived expertise.

This is especially important for:

- long-form expert guides,

- product category pages,

- AI Overview–oriented content.
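Campaign-level consistency can also be sanity-checked with a simple tally. The sketch below assumes you keep a list of planned placements with a topic label for each; the labels, the domains, and the idea of flagging everything outside a chosen set of campaign topics are illustrative assumptions, not a description of how any search engine or tool scores a link profile.

```python
def off_topic_placements(placements, campaign_topics):
    """Return placements outside the campaign's topic set and their share.

    placements: list of (domain, topic) pairs.
    campaign_topics: the core and adjacent topic labels you consider on-theme.
    """
    stray = [(domain, topic) for domain, topic in placements if topic not in campaign_topics]
    share = len(stray) / len(placements) if placements else 0.0
    return stray, share


# Made-up campaign: ten placements, one of which hops to an unrelated topic.
placements = (
    [(f"analytics-{i}.example", "seo analytics") for i in range(7)]
    + [("tools-a.example", "seo tools"), ("tools-b.example", "seo tools")]
    + [("coin-daily.example", "crypto")]
)

stray, share = off_topic_placements(placements, {"seo analytics", "seo tools"})
print(stray, share)
```

A rising off-topic share across batches is an early sign of "topic hopping" that is easy to miss when each placement is approved one at a time.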

Conclusion

In today’s LLM-driven search, topical relevance is no longer a soft signal or a matter of intuition. It’s something search systems actively understand and evaluate. Finding “semantically correct” link placement sites means aligning with the same topics, entities, and explanatory depth your content is built around. By using tools like Serpzilla Smart Topic, teams can move from guessing relevance to engineering it deliberately, building link profiles that reinforce authority in a way both users and LLMs recognize as credible.